What is Data Partitioning?

For databases with extremely large tables, partitioning is a wonderful and crafty trick for database designers to improve database performance and make maintenance much easier. The maximum table size allowed in a PostgreSQL database is 32TB; however, unless it’s running on a not-yet-invented computer from the future, performance issues may arise on a table only a hundredth of that size.

Partitioning splits a table into multiple tables, generally in a way that applications accessing the table don’t notice any difference other than faster access to the data they need. By splitting the table into multiple tables, the idea is to let queries scan much smaller tables and indexes to find the data needed. Regardless of how efficient an index strategy is, scanning an index for a 50GB table will generally be faster than scanning one for a 500GB table. This applies to table scans as well, because sometimes table scans are just unavoidable.

When introducing a partitioned table to the query planner, there are a few things to know and understand about the query planner itself. Before any query is actually executed, the query planner will take the query and plan out the most efficient way it will access the data. By having the data split up across different tables, the planner can decide what tables to access, and what tables to completely ignore, based on what each table contains.

This is done by adding constraints to the split up tables that define what data is allowed in each table, and with a good design, we can have the query planner scan a small subset of data rather than the whole thing.

Should A Table Be Partitioned?

Partitioning can drastically improve performance on a table when done right, but if done wrong or when not needed, it can make performance worse, even unusable.

How big is the table?

There is no hard-and-fast rule for how big a table must be before partitioning is an option, but based on database access trends, database users and administrators will start to see performance on a specific table degrade as it gets bigger. In general, partitioning should only be considered when someone says “I can’t do X because the table is too big.” For some hosts, 200GB could be the right time to partition; for others, it may be time to partition when the table hits 1TB.
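To put a number on “too big”, PostgreSQL’s built-in size functions can report how much space a table and its indexes occupy. This is a minimal sketch that assumes the website_views table used later in this article; substitute any table name.

```sql
-- Size of the table's main data, its indexes, and everything combined
-- (the total also includes TOAST data).
SELECT pg_size_pretty(pg_relation_size('website_views'))       AS table_only,
       pg_size_pretty(pg_indexes_size('website_views'))        AS indexes,
       pg_size_pretty(pg_total_relation_size('website_views')) AS total;
```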

If the table is determined to be “too big”, it’s time to look at the access patterns. Either by knowing the applications that access the database, or by monitoring logs and generating query reports with something like pgBadger, we can see how a table is accessed, and depending on how it’s accessed, we can have options for a good partitioning strategy.

To learn more about pgBadger and how to use it, please check out our previous article about pgBadger.

Is table bloat an issue?

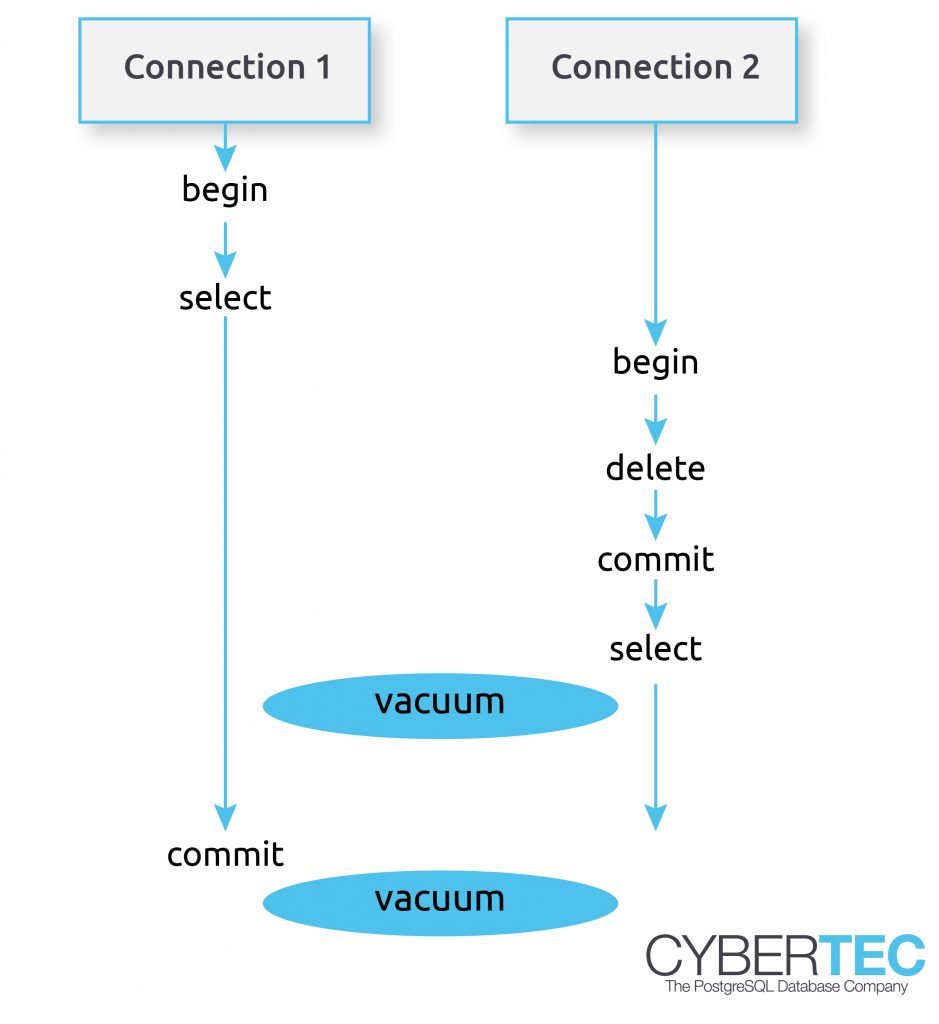

Updated and deleted rows result in dead tuples that ultimately need to be cleaned up. Vacuuming a table, whether manually or automatically, goes over the rows in the table and determines whether each is to be reclaimed or left alone. The larger the table, the longer this process takes, and the more system resources it uses. Even if 90% of a table is unchanging data, it must be scanned each time a vacuum is run. Partitioning splits the table that needs vacuuming into smaller ones, which reduces the amount of unchanging data that has to be scanned, shortens vacuum time overall, and frees up system resources for user access rather than system maintenance.
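One way to see whether dead tuples are piling up is the pg_stat_user_tables statistics view. The query below is only a starting point: the counts are estimates maintained by the statistics collector, and the LIMIT of 10 is an arbitrary choice.

```sql
-- Tables with the most dead tuples, and when they were last vacuumed.
SELECT relname,
       n_live_tup,
       n_dead_tup,
       last_vacuum,
       last_autovacuum
FROM pg_stat_user_tables
ORDER BY n_dead_tup DESC
LIMIT 10;
```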

How is data deleted, if at all?

If data is deleted on a schedule, say data older than 4 years gets archived and deleted, this could result in heavy-hitting DELETE statements that take time to run and, as mentioned before, create dead rows that need to be vacuumed. If a good partitioning strategy is implemented, a multi-hour DELETE statement with vacuuming maintenance afterward could be turned into a one-minute DROP TABLE statement on an old monthly table with zero vacuum maintenance.

How Should The Table Be Partitioned?

The keys for access patterns are in the WHERE clause and JOIN conditions. Any time a query specifies columns in the WHERE and JOIN clauses, it tells the database “this is the data I want”. Much like designing indexes that target these clauses, partitioning strategies rely on targeting these columns to separate data and have the query access as few partitions as possible.

Examples:

- A transaction table, with a date column that is always used in a where clause.

- A customer table with location columns, such as country of residence that is always used in where clauses.

The most common columns to focus on for partitioning are timestamps, since a huge chunk of the data is usually historical information and will likely have a rather predictable spread across different time groupings.

Determine the Data Spread

Once we identify which columns to partition on we should take a look at the spread of data, with the goal of creating partition sizes that spread the data as evenly as possible across the different child partitions.

severalnines=# SELECT DATE_TRUNC('year', view_date)::DATE, COUNT(*) FROM website_views GROUP BY 1 ORDER BY 1;

date_trunc | count

------------+----------

2013-01-01 | 11625147

2014-01-01 | 20819125

2015-01-01 | 20277739

2016-01-01 | 20584545

2017-01-01 | 20777354

2018-01-01 | 491002

(6 rows)

In this example, we truncate the timestamp column to the year, which shows about 20 million rows per full year. If all of our queries specify a date or date range, and those ranges usually cover data within a single year, this may be a great starting strategy for partitioning, as it would result in a single table per year, with a manageable number of rows per table.
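If yearly tables turn out to be too coarse, the same query can be repeated at a finer grain to see how evenly a single year breaks down. This sketch reuses the website_views table and view_date column from the query above; the choice of 2017 is arbitrary.

```sql
-- Row counts per month for one year, to judge whether monthly
-- partitions would be reasonably even in size.
SELECT DATE_TRUNC('month', view_date)::DATE AS month,
       COUNT(*)
FROM website_views
WHERE view_date >= '2017-01-01'
  AND view_date <  '2018-01-01'
GROUP BY 1
ORDER BY 1;
```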


Creating a Partitioned Table

There are a couple of ways to create partitioned tables; however, we will focus mainly on the most feature-rich type available, trigger-based partitioning. This requires manual setup and a bit of coding in the plpgsql procedural language to get working.

It operates by having a parent table that will ultimately become empty (or remain empty if it’s a new table), and child tables that INHERIT the parent table. When the parent table is queried, the child tables are also searched for data due to the INHERIT applied to the child tables. However, since child tables only contain subsets of the parent’s data, we add a CONSTRAINT on the table that does a CHECK and verifies that the data matches what’s allowed in the table. This does two things: First it refuses data that doesn’t belong, and second it tells the query planner that only data matching this CHECK CONSTRAINT is allowed in this table, so if searching for data that doesn’t match the table, don’t even bother searching it.

Lastly, we apply a trigger to the parent table that executes a stored procedure that decides which child table to put the data in.

Create Table

Creating the parent table is like any other table creation.

severalnines=# CREATE TABLE data_log (data_log_sid SERIAL PRIMARY KEY,

date TIMESTAMP WITHOUT TIME ZONE DEFAULT NOW(),

event_details VARCHAR);

CREATE TABLE

Create Child Tables

Creating the child tables is similar, but involves some additions. For organization’s sake, we’ll have our child tables exist in a separate schema. Do this for each child table, changing the details accordingly.

NOTE: The name of the sequence used in the nextval() comes from the sequence that the parent created. This is crucial for all child tables to use the same sequence.

severalnines=# CREATE SCHEMA part;

CREATE SCHEMA

severalnines=# CREATE TABLE part.data_log_2018 (data_log_sid integer DEFAULT nextval('public.data_log_data_log_sid_seq'::regclass),

date TIMESTAMP WITHOUT TIME ZONE DEFAULT NOW(),

event_details VARCHAR)

INHERITS (public.data_log);

CREATE TABLE

severalnines=# ALTER TABLE ONLY part.data_log_2018

ADD CONSTRAINT data_log_2018_pkey PRIMARY KEY (data_log_sid);

ALTER TABLE

severalnines=# ALTER TABLE part.data_log_2018 ADD CONSTRAINT data_log_2018_date CHECK (date >= '2018-01-01' AND date < '2019-01-01');

ALTER TABLE
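The trigger function in the next step also routes rows to part.data_log_2019, so that child table has to exist as well. The sketch below is a more compact variant of the steps above: it declares the constraints inside CREATE TABLE and relies on the child inheriting the parent’s columns and column defaults. The index name and the decision to index the date column are assumptions for illustration, not part of the original setup.

```sql
-- Child table for 2019. Columns (and their defaults) come from the parent
-- through INHERITS; only the constraints are declared here.
CREATE TABLE part.data_log_2019 (
    CONSTRAINT data_log_2019_pkey PRIMARY KEY (data_log_sid),
    CONSTRAINT data_log_2019_date
        CHECK (date >= '2019-01-01' AND date < '2020-01-01')
) INHERITS (public.data_log);

-- Indexes are not inherited, so each child needs its own.
-- (Hypothetical index, assuming queries filter on the date column.)
CREATE INDEX data_log_2019_date_idx ON part.data_log_2019 (date);
```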

Create Function and Trigger

Finally, we create our stored procedure, and add the trigger to our parent table.

severalnines=# CREATE OR REPLACE FUNCTION

public.insert_trigger_table()

RETURNS trigger

LANGUAGE plpgsql

AS $function$

BEGIN

IF NEW.date >= '2018-01-01' AND NEW.date < '2019-01-01' THEN

INSERT INTO part.data_log_2018 VALUES (NEW.*);

RETURN NULL;

ELSIF NEW.date >= '2019-01-01' AND NEW.date < '2020-01-01' THEN

INSERT INTO part.data_log_2019 VALUES (NEW.*);

RETURN NULL;

ELSE

RAISE EXCEPTION 'No partition found for date %', NEW.date;

END IF;

END;

$function$;

CREATE FUNCTION

severalnines=# CREATE TRIGGER insert_trigger BEFORE INSERT ON data_log FOR EACH ROW EXECUTE PROCEDURE insert_trigger_table();

CREATE TRIGGER

Test it Out

Now that it’s all created, let’s test it. In this test, I’ve added more yearly tables covering 2013 - 2020.

Note: The insert response below is ‘INSERT 0 0’, which would suggest it didn’t insert anything. This will be addressed later in this article.

severalnines=# INSERT INTO data_log (date, event_details) VALUES (now(), 'First insert');

INSERT 0 0

severalnines=# SELECT * FROM data_log WHERE date >= '2018-08-01' AND date < '2018-09-01';

data_log_sid | date | event_details

--------------+----------------------------+---------------

1 | 2018-08-17 23:01:38.324056 | First insert

(1 row)

It exists, but let’s look at the query planner to make sure the row came from the correct child table, and the parent table didn’t return any rows at all.

severalnines=# EXPLAIN ANALYZE SELECT * FROM data_log;

QUERY PLAN

------------------------------------------------------------------------------------------------------------------

Append (cost=0.00..130.12 rows=5813 width=44) (actual time=0.016..0.019 rows=1 loops=1)

-> Seq Scan on data_log (cost=0.00..1.00 rows=1 width=44) (actual time=0.007..0.007 rows=0 loops=1)

-> Seq Scan on data_log_2015 (cost=0.00..21.30 rows=1130 width=44) (actual time=0.001..0.001 rows=0 loops=1)

-> Seq Scan on data_log_2013 (cost=0.00..17.80 rows=780 width=44) (actual time=0.001..0.001 rows=0 loops=1)

-> Seq Scan on data_log_2014 (cost=0.00..17.80 rows=780 width=44) (actual time=0.001..0.001 rows=0 loops=1)

-> Seq Scan on data_log_2016 (cost=0.00..17.80 rows=780 width=44) (actual time=0.001..0.001 rows=0 loops=1)

-> Seq Scan on data_log_2017 (cost=0.00..17.80 rows=780 width=44) (actual time=0.001..0.001 rows=0 loops=1)

-> Seq Scan on data_log_2018 (cost=0.00..1.02 rows=2 width=44) (actual time=0.005..0.005 rows=1 loops=1)

-> Seq Scan on data_log_2019 (cost=0.00..17.80 rows=780 width=44) (actual time=0.001..0.001 rows=0 loops=1)

-> Seq Scan on data_log_2020 (cost=0.00..17.80 rows=780 width=44) (actual time=0.001..0.001 rows=0 loops=1)

Planning time: 0.373 ms

Execution time: 0.069 ms

(12 rows)

Good news, the single row we inserted landed in the 2018 table, where it belongs. But as we can see, the query doesn’t specify a where clause using the date column, so in order to fetch everything, the query planner and execution did a sequential scan on every single table.

Next, let’s test using a where clause.

severalnines=# EXPLAIN ANALYZE SELECT * FROM data_log WHERE date >= '2018-08-01' AND date < '2018-09-01';

QUERY PLAN

------------------------------------------------------------------------------------------------------------------------------------------------

Append (cost=0.00..2.03 rows=2 width=44) (actual time=0.013..0.014 rows=1 loops=1)

-> Seq Scan on data_log (cost=0.00..1.00 rows=1 width=44) (actual time=0.007..0.007 rows=0 loops=1)

Filter: ((date >= '2018-08-01 00:00:00'::timestamp without time zone) AND (date < '2018-09-01 00:00:00'::timestamp without time zone))

-> Seq Scan on data_log_2018 (cost=0.00..1.03 rows=1 width=44) (actual time=0.006..0.006 rows=1 loops=1)

Filter: ((date >= '2018-08-01 00:00:00'::timestamp without time zone) AND (date < '2018-09-01 00:00:00'::timestamp without time zone))

Planning time: 0.591 ms

Execution time: 0.041 ms

(7 rows)

Here we can see that the query planner and execution did a sequential scan on two tables, the parent and the child table for 2018. There are child tables for the years 2013 - 2020, but those other than 2018 were never accessed because the where clause has a range belonging only within 2018. The query planner ruled out all the other tables because the CHECK CONSTRAINT deems it impossible for the data to exist in those tables.
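This pruning depends on the constraint_exclusion setting, whose default value of partition covers inheritance setups like this one. It also only works when the planner can compare the CHECK constraints against constants at plan time. The sketch below shows how to check the setting, plus a query that is not expected to be pruned because now() is not a constant the planner can evaluate in advance.

```sql
-- Check the current value; 'partition' is the default.
SHOW constraint_exclusion;

-- Set it for the session if it has been turned off.
SET constraint_exclusion = partition;

-- Expect every child table in this plan: the comparison value comes from
-- now(), which the planner cannot use to rule out tables.
EXPLAIN SELECT * FROM data_log WHERE date >= now() - interval '1 month';
```

Applications that need pruning for “recent data” queries can compute the date on the client side and pass it in as a literal.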

Working Partitions with Strict ORM Tools or Inserted Row Validation

As mentioned before, the example we built returns an ‘INSERT 0 0’ even though we inserted a row. If the applications inserting data into these partitioned tables verify the number of rows inserted, that verification will fail. There is a fix, but it adds another layer of complexity to the partitioned table, so it can be ignored if this scenario is not an issue for the applications using the partitioned table.

Using a View instead of the parent table.

The fix for this issue is to create a view that queries the parent table, and direct INSERT statements to the view. Inserting into a view may sound crazy, but that’s where the trigger on the view comes in.

severalnines=# CREATE VIEW data_log_view AS

SELECT data_log.data_log_sid,

data_log.date,

data_log.event_details

FROM data_log;

CREATE VIEW

Querying this view will look just like querying the main table, and WHERE clauses as well as JOINS will operate as expected.

View Specific Function and Trigger

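The function and trigger for the view are slightly different from the ones we defined before: the function is named insert_trigger_view, each branch ends with RETURN NEW instead of RETURN NULL, and the trigger is defined as INSTEAD OF INSERT on the view.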

CREATE OR REPLACE FUNCTION public.insert_trigger_view()

RETURNS trigger

LANGUAGE plpgsql

AS $function$

BEGIN

IF NEW.date >= '2018-01-01' AND NEW.date < '2019-01-01' THEN

INSERT INTO part.data_log_2018 VALUES (NEW.*);

RETURN NEW;

ELSIF NEW.date >= '2019-01-01' AND NEW.date < '2020-01-01' THEN

INSERT INTO part.data_log_2019 VALUES (NEW.*);

RETURN NEW;

ELSE

RAISE EXCEPTION 'No partition found for date %', NEW.date;

END IF;

END;

$function$;

severalnines=# CREATE TRIGGER insert_trigger INSTEAD OF INSERT ON data_log_view FOR EACH ROW EXECUTE PROCEDURE insert_trigger_view();

The “INSTEAD OF” definition takes over the insert command on the view and executes the function instead. The function we defined has a very specific requirement: it must do a ‘RETURN NEW;’ after the insert into the child tables is complete. Leaving this out, or returning NULL as we did before, will result in ‘INSERT 0 0’ instead of the ‘INSERT 0 1’ we would expect.

Example:

severalnines=# INSERT INTO data_log_view (date, event_details) VALUES (now(), 'First insert on the view');

INSERT 0 1

severalnines=# EXPLAIN ANALYZE SELECT * FROM data_log_view WHERE date >= '2018-08-01' AND date < '2018-09-01';

QUERY PLAN

------------------------------------------------------------------------------------------------------------------------------------------------

Append (cost=0.00..2.03 rows=2 width=44) (actual time=0.015..0.017 rows=2 loops=1)

-> Seq Scan on data_log (cost=0.00..1.00 rows=1 width=44) (actual time=0.009..0.009 rows=0 loops=1)

Filter: ((date >= '2018-08-01 00:00:00'::timestamp without time zone) AND (date < '2018-09-01 00:00:00'::timestamp without time zone))

-> Seq Scan on data_log_2018 (cost=0.00..1.03 rows=1 width=44) (actual time=0.006..0.007 rows=2 loops=1)

Filter: ((date >= '2018-08-01 00:00:00'::timestamp without time zone) AND (date < '2018-09-01 00:00:00'::timestamp without time zone))

Planning time: 0.633 ms

Execution time: 0.048 ms

(7 rows)

severalnines=# SELECT * FROM data_log_view WHERE date >= '2018-08-01' AND date < '2018-09-01';

data_log_sid | date | event_details

--------------+----------------------------+--------------------------

1 | 2018-08-17 23:46:24.860634 | First insert

2 | 2018-08-18 00:13:01.126795 | First insert on the view

(2 rows)

Applications that check the inserted ‘rowcount’ will find this fix works as expected. In this example, we appended _view to our view and stored procedure, but if the table should be partitioned without any user-visible or application change, we would rename the parent table to data_log_parent and give the view the old parent table’s name.
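The rename could look roughly like the sketch below, run in a single transaction so applications never see a missing data_log. It assumes the insert_trigger_view() function from above. The two ALTER VIEW statements are a precaution based on the assumption that an INSTEAD OF trigger sees the view’s own column defaults rather than the table’s; test whether they are needed in your setup.

```sql
BEGIN;

-- Children keep inheriting from the renamed parent; the sequence keeps its name.
ALTER TABLE public.data_log RENAME TO data_log_parent;

-- The view takes over the name applications already use.
CREATE VIEW public.data_log AS
    SELECT data_log_sid, date, event_details
    FROM public.data_log_parent;

-- Assumption: give the view the same defaults as the table so inserts that
-- omit these columns still get a key and a timestamp.
ALTER VIEW public.data_log
    ALTER COLUMN data_log_sid
    SET DEFAULT nextval('public.data_log_data_log_sid_seq'::regclass);
ALTER VIEW public.data_log
    ALTER COLUMN date SET DEFAULT now();

CREATE TRIGGER insert_trigger
    INSTEAD OF INSERT ON public.data_log
    FOR EACH ROW EXECUTE PROCEDURE public.insert_trigger_view();

COMMIT;
```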

Updating a row and changing the partitioned column value

One thing to be aware of is that updating a row in the partitioned table and changing the value of the partitioned column to something not allowed by the child table’s constraint will result in an error. If this type of update will never happen, then it can be ignored, but if it is a possibility, a new trigger for UPDATE should be written that effectively deletes the row from the old child partition and inserts a new one into the new target child partition.
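A rough sketch of such an UPDATE trigger follows. The function and trigger names are hypothetical. Because row-level triggers fire on the child table that holds the row, the trigger has to be created on each child with that child’s own date range. It re-inserts through the parent so the insert trigger from earlier picks the new partition. Treat it as a starting point to test, not a finished implementation.

```sql
CREATE OR REPLACE FUNCTION public.move_data_log_row()
RETURNS trigger
LANGUAGE plpgsql
AS $function$
BEGIN
    -- Remove the row from the child table that fired the trigger.
    EXECUTE format('DELETE FROM %I.%I WHERE data_log_sid = $1',
                   TG_TABLE_SCHEMA, TG_TABLE_NAME)
        USING OLD.data_log_sid;

    -- Re-insert through the parent; its insert trigger routes the row.
    INSERT INTO public.data_log VALUES (NEW.*);

    -- Skip the original UPDATE, since the row has already been moved.
    RETURN NULL;
END;
$function$;

-- Fires only when the new date falls outside this child's range.
CREATE TRIGGER move_row_trigger
    BEFORE UPDATE ON part.data_log_2018
    FOR EACH ROW
    WHEN (NEW.date < '2018-01-01' OR NEW.date >= '2019-01-01')
    EXECUTE PROCEDURE public.move_data_log_row();
```

As with the insert trigger, returning NULL means a moved row is not counted in the UPDATE’s reported row count.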

Creating Future Partitions

Creating future partitions can be done in a few different ways, each with their pros and cons.

Future Partition Creator

An external program can be written up to create future partitions X time before they are needed. In a partitioning example partitioned on a date, the next needed partition to create (in our case 2019) could be set to be created sometime in December. This can be a manual script run by the Database Administrator, or set to have cron run it when needed. Yearly partitions would mean it runs once a year, however daily partitions are common, and a daily cron job makes for a happier DBA.
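The creation step itself can live in a small function that a script or cron job calls. The sketch below uses a hypothetical function name and follows the yearly naming used in this article; make_date() requires PostgreSQL 9.4 or later. The insert trigger function still needs a matching branch for the new year.

```sql
CREATE OR REPLACE FUNCTION public.create_data_log_partition(p_year integer)
RETURNS void
LANGUAGE plpgsql
AS $function$
DECLARE
    v_table text := format('data_log_%s', p_year);
BEGIN
    -- IF NOT EXISTS makes the call safe to repeat.
    EXECUTE format(
        'CREATE TABLE IF NOT EXISTS part.%I (
             CONSTRAINT %I PRIMARY KEY (data_log_sid),
             CONSTRAINT %I CHECK (date >= %L AND date < %L)
         ) INHERITS (public.data_log)',
        v_table,
        v_table || '_pkey',
        v_table || '_date',
        make_date(p_year, 1, 1),
        make_date(p_year + 1, 1, 1));
END;
$function$;

-- Example call, e.g. from a job that runs each December:
SELECT public.create_data_log_partition(2019);
```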

Automatic Partition Creator

With the power of plpgsql, we can capture the error raised when trying to insert data into a child partition that doesn’t exist, create the needed partition on the fly, and then try the insert again. This option works well except when many different clients insert similar data at the same time, which can cause a race condition where one client creates the table while another attempts to create the same table and gets an error that it already exists. Clever and advanced plpgsql programming can fix this, but whether or not it is worth the level of effort is up for debate. If this race condition will not happen due to the insert patterns, then there’s nothing to worry about.
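One possible shape for this, sketched under several assumptions: the function name is hypothetical, partitions are yearly, and NEW.date is never NULL. The inner exception block treats “someone else created it first” as success, which is one simple way to tolerate the race described above. Catching unique_violation alongside duplicate_table is a guess at how a concurrent CREATE TABLE may fail and should be verified under load.

```sql
CREATE OR REPLACE FUNCTION public.insert_trigger_table_auto()
RETURNS trigger
LANGUAGE plpgsql
AS $function$
DECLARE
    v_year  integer;
    v_table text;
BEGIN
    v_year  := EXTRACT(YEAR FROM NEW.date)::integer;
    v_table := format('data_log_%s', v_year);

    BEGIN
        -- Common case: the partition already exists.
        EXECUTE format('INSERT INTO part.%I SELECT ($1).*', v_table) USING NEW;
    EXCEPTION WHEN undefined_table THEN
        BEGIN
            EXECUTE format(
                'CREATE TABLE part.%I (
                     CONSTRAINT %I PRIMARY KEY (data_log_sid),
                     CONSTRAINT %I CHECK (date >= %L AND date < %L)
                 ) INHERITS (public.data_log)',
                v_table, v_table || '_pkey', v_table || '_date',
                make_date(v_year, 1, 1), make_date(v_year + 1, 1, 1));
        EXCEPTION WHEN duplicate_table OR unique_violation THEN
            NULL;  -- another session created the partition first
        END;
        -- Retry now that the partition exists.
        EXECUTE format('INSERT INTO part.%I SELECT ($1).*', v_table) USING NEW;
    END;

    RETURN NULL;
END;
$function$;
```

Each exception block adds overhead to every inserted row, so this variant deserves the same performance testing as any other trigger function.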

Dropping Partitions

If data retention rules dictate that data is deleted after a certain amount of time, this becomes easier with partitioned tables if partitioned on a date column. If we are to delete data that’s 10 years old, it could be as simple as:

severalnines=# DROP TABLE part.data_log_2007;

DROP TABLE

This is much quicker, and more efficient than a ‘DELETE’ statement, as it doesn’t result in any dead tuples to clean up with a vacuum.
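If the old data should be archived before it is removed, the child can first be detached from the parent so queries stop seeing it, then dumped and dropped at leisure. This is a sketch of that order of operations; the pg_dump invocation in the comment is only an illustration, and the output file name is made up.

```sql
-- Detach the child: queries on data_log no longer include it,
-- but the table and its data still exist.
ALTER TABLE part.data_log_2007 NO INHERIT public.data_log;

-- Archive outside of SQL, for example:
--   pg_dump -t part.data_log_2007 severalnines > data_log_2007.sql

-- Once the archive is verified, remove the table.
DROP TABLE part.data_log_2007;
```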

Note: If removing tables from the partition setup, code in the trigger functions should also be altered so that it no longer directs data to the dropped table.

Things to Know Before Partitioning

Partitioning tables can offer a drastic improvement to performance, but it could also make it worse. Before pushing to production servers, the partitioning strategy should be tested extensively for data consistency, performance, and everything else that matters to the application. Partitioning a table has a few moving parts, and they should all be tested to make sure there are zero issues.

When it comes to deciding the number of partitions, it’s highly suggested to keep the number of child tables under 1000 tables, and even lower if possible. Once the child table count gets above ~1000, performance starts to take a dive as the query planner itself ends up taking much longer just to make the query plan. It’s not unheard of to have a query plan take many seconds, while the actual execution only takes a few milliseconds. If servicing thousands of queries a minute, several seconds could bring applications to a standstill.
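The current number of child tables can be read from the pg_inherits system catalog. This sketch counts the direct children of the data_log parent used in this article.

```sql
-- Number of tables that directly inherit from public.data_log.
SELECT count(*) AS child_tables
FROM pg_inherits
WHERE inhparent = 'public.data_log'::regclass;
```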

The plpgsql trigger stored procedures can also get complicated, and if too complicated, also slow down performance. The stored procedure is executed once for every row inserted into the table. If it ends up doing too much processing for every row, inserts could become too slow. Performance testing will make sure it’s still in acceptable range.

Get Creative

Partitioning tables in PostgreSQL can be as advanced as needed. Instead of date columns, tables can be partitioned on a ‘country’ column, with a table for each country. Partitioning can be done on multiple columns, such as both a ‘date’ and a ‘country’ column. This will make the stored procedure handling the inserts more complex, but it’s 100% possible.
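As an illustration, the same inheritance approach applied to a country column, alone and combined with a date, might look like the following. The customer table and all of its columns are hypothetical, and the part schema is assumed to exist from earlier in this article.

```sql
-- Hypothetical parent table for illustration only.
CREATE TABLE public.customer (
    customer_sid SERIAL,
    country      CHAR(2) NOT NULL,
    signup_date  DATE    NOT NULL,
    name         VARCHAR
);

-- One child per country.
CREATE TABLE part.customer_ca (
    CHECK (country = 'CA')
) INHERITS (public.customer);

-- One child per country and year, using both columns in the constraint.
CREATE TABLE part.customer_us_2018 (
    CHECK (country = 'US'
           AND signup_date >= '2018-01-01'
           AND signup_date <  '2019-01-01')
) INHERITS (public.customer);
```

A query that filters on country = 'US' and a 2018 date range gives the planner enough information to rule out both of the other kinds of child table.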

Remember, the goals with partitioning are to break extremely large tables down into smaller ones, and do it in a well thought out way to allow the query planner to access the data faster than it could have in the larger original table.

Declarative Partitioning

PostgreSQL 10 introduced a new partitioning feature, ‘Declarative Partitioning’. It’s an easier way to set up partitions, but it has some limitations. If the limitations are acceptable, it will likely perform faster than the manual partition setup, but only thorough testing will verify that.

The official PostgreSQL documentation has information about Declarative Partitioning and how it works. It’s new in PostgreSQL 10, and with version 11 of PostgreSQL on the horizon at the time of this writing, some of the limitations are fixed, but not all of them. As PostgreSQL evolves, Declarative Partitioning may become a full replacement for the more complex partitioning covered in this article. Until then, Declarative Partitioning may be an easier alternative if none of its limitations get in the way of the partitioning needs.
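For comparison, a declarative version of this article’s yearly layout might look like the sketch below, using PostgreSQL 10 syntax. The table names are made up so they don’t collide with the inheritance-based tables above, and no primary key is declared because PostgreSQL 10 does not support one on a partitioned table.

```sql
-- The parent declares the partitioning scheme and holds no data itself.
CREATE TABLE data_log_declarative (
    data_log_sid  SERIAL,
    date          TIMESTAMP WITHOUT TIME ZONE NOT NULL DEFAULT NOW(),
    event_details VARCHAR
) PARTITION BY RANGE (date);

-- Each partition states its bounds: FROM is inclusive, TO is exclusive.
CREATE TABLE data_log_declarative_2018 PARTITION OF data_log_declarative
    FOR VALUES FROM ('2018-01-01') TO ('2019-01-01');

CREATE TABLE data_log_declarative_2019 PARTITION OF data_log_declarative
    FOR VALUES FROM ('2019-01-01') TO ('2020-01-01');

-- Rows are routed to the matching partition without a trigger.
INSERT INTO data_log_declarative (date, event_details)
VALUES ('2018-08-17', 'Routed by the partition bounds');
```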

Declarative Partitioning Limitations

The PostgreSQL documentation addresses all of the limitations with this type of partitioning in PostgreSQL 10, but a great overview can be found on The Official PostgreSQL Wiki which lists the limitations in an easier to read format, as well as noting which ones have been fixed in the upcoming PostgreSQL 11.

Ask the Community

Database Administrators all around the globe have been designing advanced and custom partitioning strategies for a long time, and many of us hang out in IRC and mailing lists. If help is needed deciding the best strategy, or just getting a bug in a stored procedure resolved, the community is here to help.

- IRC

Freenode has a very active channel called #postgres, where users help each other understand concepts, fix errors, or find other resources.

- Mailing Lists

PostgreSQL has a handful of mailing lists that can be joined. Longer form questions / issues can be sent here, and can reach many more people than IRC at any given time. The lists can be found on the PostgreSQL Website, and the lists pgsql-general or pgsql-admin are good resources.
