Introduction

Nowadays, high availability is a requirement for many systems, no matter what technology we use. This is especially important for databases, as they store data that applications rely upon. There are different ways to replicate data across multiple servers and to fail over traffic when, for example, a primary server stops responding.

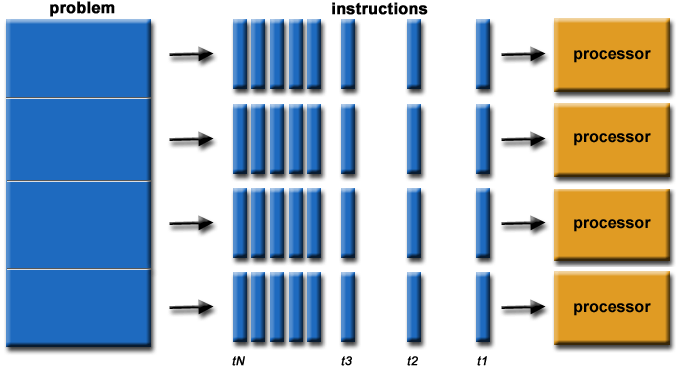

Architecture

There are several architectures for PostgreSQL high availability, but the basic ones would be master-slave and master-master architectures.

Master-Slave

This may be the most basic HA architecture we can set up and, often, the easiest to configure and maintain. It is based on one master database with one or more standby servers. These standby databases will remain synchronized (or almost synchronized) with the master, depending on whether the replication is synchronous or asynchronous. If the main server fails, the standby contains almost all of the data of the main server, and can quickly be turned into the new master database server.

We can have two categories of standby databases, based on the nature of the replication:

- Logical standbys - The replication between the master and the slaves is made via SQL statements.

- Physical standbys - The replication between the master and the slaves is made via the internal data structure modifications.

In the case of PostgreSQL, a stream of write-ahead log (WAL) records is used to keep the standby databases synchronized. This can be synchronous or asynchronous, and the entire database server is replicated.
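As a rough illustration of what "almost synchronized" means, the gap between the master and a standby can be expressed in bytes of WAL. The sketch below assumes we have already read the two WAL positions (LSNs) as text, for example from `pg_current_wal_lsn()` on the master and `pg_last_wal_replay_lsn()` on the standby. The helper names are our own and not part of any tool mentioned here.

```python
def lsn_to_bytes(lsn: str) -> int:
    """Convert a PostgreSQL LSN such as '0/3000060' to an absolute byte position.

    An LSN is printed as two hexadecimal numbers separated by a slash:
    the high 32 bits and the low 32 bits of a 64-bit WAL position.
    """
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def replication_lag_bytes(master_lsn: str, standby_lsn: str) -> int:
    """Bytes of WAL the standby has not replayed yet (never negative)."""
    return max(0, lsn_to_bytes(master_lsn) - lsn_to_bytes(standby_lsn))


if __name__ == "__main__":
    # Hypothetical positions, used only for illustration.
    print(replication_lag_bytes("0/3000060", "0/3000000"))
```

A lag of zero on a synchronous standby is the expected steady state, while an asynchronous standby can trail the master by some amount that depends on load and network conditions.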

From version 10, PostgreSQL includes a built-in option to set up logical replication, which is based on constructing a stream of logical data modifications from the information in the WAL. This replication method allows the data changes from individual tables to be replicated without the need to designate a master server. It also allows data to flow in multiple directions.

But a master-slave setup is not enough to effectively ensure high availability, as we also need to handle failures. To handle failures, we need to be able to detect them. Once we know there is a failure, e.g. errors on the master or the master is not responding, we can then select a slave and fail over to it with the smallest possible delay. It is important that this process is as efficient as possible, so that full functionality is restored and the applications can start working again. PostgreSQL itself does not include an automatic failover mechanism, so this automation requires a custom script or third-party tools.
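To make the detection step more concrete, here is a minimal sketch of a failure detector that only declares the master failed after several consecutive failed probes, so that a single dropped check does not trigger a failover. The class name, the `probe` callable and the threshold value are assumptions made for this example; a real probe would typically try to connect to the database and run a trivial query.

```python
from typing import Callable


class FailureDetector:
    """Declare a node failed after `threshold` consecutive failed probes."""

    def __init__(self, probe: Callable[[], bool], threshold: int = 3) -> None:
        self.probe = probe          # returns True when the node answers
        self.threshold = threshold  # failed probes in a row before we react
        self.consecutive_failures = 0

    def check(self) -> bool:
        """Run one probe; return True once the node should be considered failed."""
        if self.probe():
            self.consecutive_failures = 0
        else:
            self.consecutive_failures += 1
        return self.consecutive_failures >= self.threshold


if __name__ == "__main__":
    # Simulated probe results: two failures, a recovery, then three failures.
    results = iter([False, False, True, False, False, False])
    detector = FailureDetector(lambda: next(results), threshold=3)
    for _ in range(6):
        print(detector.check())
```

The threshold is a trade-off: a higher value avoids failing over because of a short network glitch, at the cost of a longer delay before the failover starts.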

After a failover happens, the application(s) need to be notified accordingly, so they can start using the new master. We also need to evaluate the state of our architecture after a failover, because we can run into a situation where only the new master is running (i.e., we had a master and only one slave before the issue). In that case, we will need to add a slave to re-create the master-slave setup we originally had for HA.

Master-Master architectures

This architecture provides a way of minimizing the impact of an error on one of the nodes, as the other node(s) can take care of all the traffic, maybe slightly affecting the performance, but never losing functionality. This architecture is often used with the dual purpose of creating an HA environment and scaling horizontally (as compared to the concept of vertical scalability, where we add more resources to a server).

PostgreSQL does not yet support this architecture "natively", so you will have to refer to third-party tools and implementations. When choosing a solution, keep in mind that there are a lot of projects/tools, but some of them are no longer supported, while others are new and might not be battle-tested in production.

For further reading on HA/Clustering architectures for Postgres, please refer to this blog.

Load Balancing

Load balancers are tools that can be used to manage the traffic from your application to get the most out of your database architecture.

Not only are they useful for balancing the load on our databases, they also help redirect applications to the available/healthy nodes and can even expose ports with different roles.

HAProxy is a load balancer that distributes traffic from one origin to one or more destinations and can define specific rules and/or protocols for this task. If any of the destinations stops responding, it is marked as offline, and the traffic is sent to the rest of the available destinations.
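The behaviour described above can be illustrated with a small round-robin balancer that skips destinations marked as offline. This is only a simplified model written for this post, not HAProxy's actual implementation, and the `Backend` and `RoundRobin` names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Backend:
    name: str
    online: bool = True


class RoundRobin:
    """Hand out backends in rotation, skipping any that are offline."""

    def __init__(self, backends: List[Backend]) -> None:
        self.backends = backends
        self._next = 0  # index where the next search starts

    def pick(self) -> Optional[Backend]:
        """Return the next online backend, or None if all are offline."""
        total = len(self.backends)
        for offset in range(total):
            index = (self._next + offset) % total
            candidate = self.backends[index]
            if candidate.online:
                self._next = (index + 1) % total
                return candidate
        return None


if __name__ == "__main__":
    pool = RoundRobin([Backend("pg1"), Backend("pg2", online=False), Backend("pg3")])
    # pg2 is offline, so traffic alternates between pg1 and pg3.
    print([pool.pick().name for _ in range(4)])
```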

Keepalived is a service that allows us to configure a virtual IP within an active/passive group of servers. This virtual IP is assigned to an active server. If this server fails, the IP is automatically migrated to the “Secondary” passive server, allowing it to continue working with the same IP in a transparent way for the systems.

Let's see how to use ClusterControl to implement a master-slave PostgreSQL cluster with load balancer servers and Keepalived configured between them, all from a friendly and easy-to-use interface.

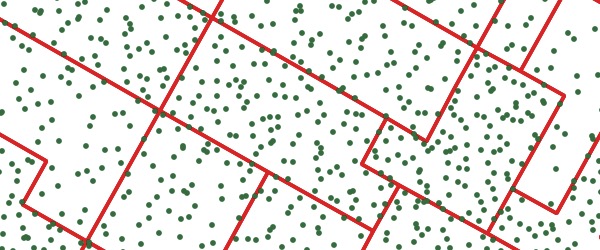

For our example we will create:

- 3 PostgreSQL servers (one master and two slaves).

- 2 HAProxy Load Balancers.

- Keepalived configured between the load balancer servers.

![Architecture diagram]()

Architecture diagram

Database deployment

To perform a deployment from ClusterControl, simply select the option “Deploy” and follow the instructions that appear.

![ClusterControl PostgreSQL Deploy 1]()

ClusterControl PostgreSQL Deploy 1

When selecting PostgreSQL, we must specify the user, the key or password, and the port used to connect to our servers by SSH. We also need a name for our new cluster and must choose whether we want ClusterControl to install the corresponding software and configurations for us.

![ClusterControl PostgreSQL Deploy 2]()

ClusterControl PostgreSQL Deploy 2

After setting up the SSH access information, we must define the database user, version and datadir (optional). We can also specify which repository to use.

In the next step, we need to add our servers to the cluster that we are going to create.

![ClusterControl PostgreSQL Deploy 3]()

ClusterControl PostgreSQL Deploy 3

When adding our servers, we can enter either an IP address or a hostname.

In the last step, we can choose if our replication will be Synchronous or Asynchronous.

![ClusterControl PostgreSQL Deploy 4]()

ClusterControl PostgreSQL Deploy 4

We can monitor the status of the creation of our new cluster from the ClusterControl activity monitor.

![ClusterControl PostgreSQL Deploy 5]()

ClusterControl PostgreSQL Deploy 5

Once the task is finished, we can see our cluster in the main ClusterControl screen.

![ClusterControl Cluster View]()

ClusterControl Cluster View

Once we have our cluster created, we can perform several tasks on it, like adding a load balancer (HAProxy) or a new replica.

Load balancer deployment

To perform a load balancer deployment, select the option “Add Load Balancer” in the cluster actions and fill in the requested information.

![ClusterControl PostgreSQL Load Balancer]()

ClusterControl PostgreSQL Load Balancer

We only need to add IP/Name, port, policy and the nodes we are going to use.

Keepalived deployment

To perform a Keepalived deployment, select the cluster, go to the “Manage” menu and the “Load Balancer” section, and then select the “Keepalived” option.

![ClusterControl PostgreSQL Keepalived]()

ClusterControl PostgreSQL Keepalived

For our HA environment, we need to select the load balancer servers and the virtual IP address.

Keepalived uses a virtual IP and migrates it from one load balancer to another in case of failure, so our setup can continue to function normally.
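The following sketch models this active/passive behaviour: the virtual IP is held by the healthy load balancer with the highest priority, and it moves when that node becomes unhealthy. It is a toy model for illustration only. The `LoadBalancer` class, the `vip_holder` function and the priority values are assumptions, and the real election in Keepalived is handled by the VRRP protocol.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class LoadBalancer:
    name: str
    priority: int   # higher value wins the virtual IP
    healthy: bool = True


def vip_holder(nodes: List[LoadBalancer]) -> Optional[str]:
    """Return the name of the node that should hold the virtual IP."""
    candidates = [node for node in nodes if node.healthy]
    if not candidates:
        return None  # nobody can serve the virtual IP
    return max(candidates, key=lambda node: node.priority).name


if __name__ == "__main__":
    nodes = [LoadBalancer("lb1", priority=101), LoadBalancer("lb2", priority=100)]
    print(vip_holder(nodes))   # active node while both are healthy
    nodes[0].healthy = False   # simulate a failure of the active node
    print(vip_holder(nodes))   # the passive node takes over the virtual IP
```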

If we followed the previous steps, we should have the following topology:

![ClusterControl PostgreSQL Topology]()

ClusterControl PostgreSQL Topology

In the “Node” section, we can check the status and some metrics of our current servers in the cluster.

![ClusterControl PostgreSQL Nodes]()

ClusterControl PostgreSQL Nodes

ClusterControl Failover

If the “Autorecovery” option is ON and the master fails, ClusterControl will promote the most advanced slave (if it is not blacklisted) to master and notify us of the problem. It also reconfigures the rest of the slaves to replicate from the new master.
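The selection rule described above can be sketched as follows: among the slaves that are not blacklisted, pick the one with the highest replayed WAL position. This is our own simplified model and not ClusterControl's code. The `Standby` class, the function names and the sample LSN values are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass
class Standby:
    name: str
    replay_lsn: str  # text form of the last replayed WAL position, e.g. '0/5000100'


def lsn_to_bytes(lsn: str) -> int:
    """Convert an LSN ('high/low' in hexadecimal) to an absolute byte position."""
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def pick_promotion_candidate(
    standbys: List[Standby], blacklist: Iterable[str] = ()
) -> Optional[Standby]:
    """Return the most advanced standby that is not blacklisted, if any."""
    excluded = set(blacklist)
    eligible = [s for s in standbys if s.name not in excluded]
    if not eligible:
        return None
    # Compare numerically: comparing the LSN strings directly would be wrong.
    return max(eligible, key=lambda s: lsn_to_bytes(s.replay_lsn))


if __name__ == "__main__":
    standbys = [Standby("pg2", "0/A000000"), Standby("pg3", "0/10000000")]
    print(pick_promotion_candidate(standbys).name)
    print(pick_promotion_candidate(standbys, blacklist=["pg3"]).name)
```

Choosing the most advanced slave minimizes the amount of data that could be lost when the replication is asynchronous.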

HAProxy is configured with two different ports, one read-write and one read-only.

In our read-write port, we have our master server as online and the rest of our nodes as offline, and in the read-only port we have both the master and the slaves online.

When HAProxy detects that one of our nodes, either master or slave, is not accessible, it automatically marks it as offline and stops sending traffic to it. Detection is done by health check scripts that ClusterControl configures at deployment time. These check whether the instances are up, whether they are undergoing recovery, or whether they are read-only.
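The mapping between the health check result and the two ports can be summarized in a few lines. The sketch below is only an illustration of the logic described in this section, since the actual scripts deployed by ClusterControl are not shown here. The function name and the two inputs are assumptions; the `in_recovery` flag corresponds to what `SELECT pg_is_in_recovery()` would return on the node.

```python
from typing import Dict


def listener_status(reachable: bool, in_recovery: bool) -> Dict[str, str]:
    """Decide how a node should appear on the read-write and read-only ports."""
    if not reachable:
        # A node that does not answer receives no traffic at all.
        return {"read_write": "offline", "read_only": "offline"}
    return {
        # Only a node that is not in recovery (the master) may accept writes.
        "read_write": "offline" if in_recovery else "online",
        # Both the master and the slaves can serve read-only traffic.
        "read_only": "online",
    }


if __name__ == "__main__":
    print("master:", listener_status(reachable=True, in_recovery=False))
    print("slave: ", listener_status(reachable=True, in_recovery=True))
    print("down:  ", listener_status(reachable=False, in_recovery=False))
```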

When ClusterControl promotes a slave to master, our HAProxy marks the old master as offline (for both ports) and puts the promoted node online (in the read-write port).

If our active HAProxy, which is assigned a Virtual IP address to which our systems connect, fails, Keepalived migrates this IP to our passive HAProxy automatically. This means that our systems are then able to continue to function normally.

In this way, our systems continue to operate normally and without our intervention.

Considerations

If we manage to recover our old failed master, it will NOT be re-introduced automatically to the cluster. We need to do it manually. One reason for this is that, if our replica was delayed at the time of the failure, adding the old master back to the cluster would mean loss of information or inconsistency of data across nodes. We might also want to analyze the issue in detail. If we just re-introduced the failed node into the cluster, we could lose diagnostic information.

Also, if failover fails, no further attempts are made. Manual intervention is required to analyze the problem and perform the corresponding actions. This is to avoid the situation where ClusterControl, as the high availability manager, keeps trying to promote one slave after another. There might be an underlying problem that we need to check first.

Security

One important thing we cannot forget before going into production with our HA environment is to make sure it is secure.

There are several security aspects to consider, such as encryption, role management and access restriction by IP address. These topics were covered in depth in a previous blog, so we will only point them out here.

In our PostgreSQL database, we have the pg_hba.conf file which handles the client authentication. We can limit the type of connection, the source IP or network, which database we can connect to and with which users. Therefore, this file is a very important piece for our security.
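To show how these restrictions combine, here is a simplified model of how pg_hba.conf records are evaluated: records are checked in order, and the first one that matches the database, the user and the client address decides the authentication method. The sketch ignores many features of the real file (connection types, lists of names, special keywords other than `all`), and the rule values, database name and user name are made up for the example.

```python
import ipaddress
from typing import List, NamedTuple, Optional


class HbaRule(NamedTuple):
    database: str  # database name or 'all'
    user: str      # role name or 'all'
    network: str   # client network in CIDR notation
    method: str    # e.g. 'scram-sha-256' or 'reject'


def match_rule(
    rules: List[HbaRule], database: str, user: str, client_ip: str
) -> Optional[str]:
    """Return the method of the first matching rule, or None if nothing matches."""
    address = ipaddress.ip_address(client_ip)
    for rule in rules:
        if rule.database not in ("all", database):
            continue
        if rule.user not in ("all", user):
            continue
        if address in ipaddress.ip_network(rule.network):
            return rule.method  # first match wins, later rules are ignored
    return None  # PostgreSQL refuses the connection when no record matches


if __name__ == "__main__":
    rules = [
        HbaRule("appdb", "app", "10.0.0.0/24", "scram-sha-256"),
        HbaRule("all", "all", "0.0.0.0/0", "reject"),
    ]
    print(match_rule(rules, "appdb", "app", "10.0.0.15"))
    print(match_rule(rules, "appdb", "app", "192.168.1.5"))
```

Because the first match wins, the order of the records matters: specific rules should come before broad ones.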

We can configure our PostgreSQL database from the postgresql.conf file so that it only listens on a specific network interface and on a port other than the default one (5432), thus avoiding basic connection attempts from unwanted sources.
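As a small illustration, the two relevant parameters are `listen_addresses` and `port`. The sketch below renders them in postgresql.conf syntax from a dictionary. The helper name, the IP address and the port number are example values chosen for this post.

```python
from typing import Dict, Union


def render_settings(settings: Dict[str, Union[str, int]]) -> str:
    """Render settings as 'name = value' lines in postgresql.conf syntax."""
    lines = []
    for name, value in settings.items():
        if isinstance(value, str):
            # String values are single-quoted; embedded quotes are doubled.
            value = "'" + value.replace("'", "''") + "'"
        lines.append(f"{name} = {value}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Listen only on one internal interface and on a non-default port.
    print(render_settings({"listen_addresses": "10.0.0.11", "port": 5433}))
```

Changing either of these parameters requires a restart of the PostgreSQL server to take effect.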

Correct user management, whether by using secure passwords or by limiting access and privileges, is also an important piece of the security settings. It is recommended to assign the minimum amount of privileges possible to users, as well as to specify, if possible, the source of the connection.

We can also enable data encryption, either in transit or at rest, to prevent unauthorized persons from accessing the information.

Auditing is important to know what is happening or has happened in our database. PostgreSQL allows you to configure several parameters for logging, or even to use the pgAudit extension for this task.

Last but not least, it is recommended to keep our database and servers up to date with the latest patches, to avoid security risks. For this, ClusterControl gives us the possibility to generate operational reports to verify whether we have updates available, and can even help us update our database.

Conclusion

In this blog we have reviewed some concepts regarding HA. We went through some possible architectures and the components needed to set them up effectively.

After that we explained how ClusterControl makes use of these components to deploy a complete HA environment for PostgreSQL.

And finally we reviewed some important security aspects to take into account before going live.