Liaqat Andrabi: Webinar: Postgres Vacuuming Through Pictures [Follow up]

Johann Oskarsson: Running Pgbadger Automatically with Zsh

Here I present a simple script that queries the database for the log file locations and automatically chooses the one for yesterday. The trick here is to apply strftime to the log filename as configured in the database. This way, it doesn’t matter how complex the log file name is in the database, it’s alawys easy to guess. All this works as long as the system’s strftime and PostgreSQL’s log file escapes are equivalent; this should never be an issue.

There are some limits to the current script. It assumes log_directory is to be found within data_directory and is not an absolute path. It also assumes there are no strftime escape sequences in the directory names themselves. Fixing either or both of these is not hard if a more general script is needed. It is also hardcoded to choose the csv log file, but this is easy to change.

Finally it runs pgbadger on the log file from yesterday, and outputs html in the webserver’s directory. The assumption here is that PostgreSQL will write its log into a new file every day, possibly in a rotating sequence.

The script is mean to be called every day with cron.

#!/usr/local/bin/zsh

zmodload zsh/datetime

datadir=`/usr/local/bin/psql -A -t -q -w -c "show data_directory" ;`

logdir=`/usr/local/bin/psql -A -t -q -w -c "show log_directory" ;`

filename=`/usr/local/bin/psql -A -t -q -w -c "show log_filename" ;`

strftime -s log $datadir/$logdir/$filename $(( $epochtime[ 1 ] - 24 * 60 * 60 ))

/usr/local/bin/pgbadger -q -I -O /var/www/html/pgbadger/ -o index.html ${log:r}.csv

Thom Brown: jsquery vs SQL/JSON

jsquery was introduced as a non-core extension for PostgreSQL 9.4 and higher by Teodor Sigaev, Alexander Korotkov and Oleg Bartunov. Like jsonpath, it also used its own datatype, jsquery.

We have some functions to which we can pass jsonpath expressions to, 2 of which have operators which can be used as shorthand (albeit without additional parameter control, as they exist primarily for indexing purposes):

| Function | Operator | Description |

|---|---|---|

jsonb_path_exists | @? | This will return true if it matched something, false if not, or null if it resulted in an operation on a missing value. |

jsonb_path_match | @@ | This does the same thing as jsonb_path_exists, but only first result item is tested. |

jsonb_path_query | None | Returns the JSON data resulting from the jsonpath expression. |

jsonb_path_query_array | None | Same as jsonb_path_query, but puts the result in a JSON array. |

jsonb_path_query_first | None | Same as jsonb_path_query, but only selects the first value. |

These will suppress errors where there's a lack of an array element, object field, an unexpected JSON type or numeric errors.

Here are some examples of how jsquery and SQL/JSON differ in practice. (Note that jsquery usage requires installation of the jsquery extension):

We will select all elements from an array which are equal to 1. Note that, here, jsquery returns an array containing 1, whereas jsonpath returns a scalar value of 1.

jsquery

SELECT '[1,2,3]'::jsonb ~~ '#. ?($ = 1).$'::jsquery;

jsonpath

SELECT jsonb_path_query('[1,2,3]'::jsonb, '$[*] ? (@ == 1)');Now we'll check that all elements are greater than 1 and less than 5.

jsquery

SELECT '[2,3,4]' @@ '#: ($ > 1 and $ < 5)'::jsquery;

jsonpath

SELECT '[2,3,4]' @? '$[*] ? (@ > 1 && @ < 5)';

And here we have some jsonb data as follows:

CREATE TABLE books (data jsonb);

INSERT INTO books (data) VALUES ('[{"author": "Charles Dickens", "book": "A Tale of Two Cities"},

{"author": "William Shakespeare", "book": "Hamlet"}]');

We want books by William Shakespeare.

jsquery

SELECT data ~~ '#. ? ($.author = "William Shakespeare")' FROM books;

jsonpath

SELECT jsonb_path_query(data,'$[*] ? (@.author == "William Shakespeare")') FROM books;

You can see that, while they share some characteristics, they are not the same. It's also possible to control whether it uses lax or strict rules which determine whether or not to throw an error if referencing a non-existing object member or a structural issue. "lax" suppresses such errors, "strict" doesn't. These are placed at the beginning of the jsonpath expression.

So we end up with the following syntax for jsonpath:

[lax|strict] <path expression> ? <filter expression>

I've put together some comparisons between jsquery and jsonpath expressions.

| - | Achievable using other jsonpath operators. |

| * | No jsonpath equivalent, but usage available at the SQL level. |

| x | No equivalent. |

| jsquery | jsonpath | Description |

|---|---|---|

$ | $ | The whole document |

. | . | Accessor |

* | * | All values at the current level |

| x | ** | All values at all levels |

#N | $[N] | Nth value of an array starting at 0 |

| x | $[start,end] | Slice of an array |

# | - | All array elements |

% | - | All object keys |

| jsquery | jsonpath | Description |

|---|---|---|

| x | + (unary) | Plus operation on a sequence |

| x | - (unary) | Minus operation on sequence |

| x | + (binary) | Addition |

| x | - (binary) | Subtraction |

| x | * | Multiplication |

| x | / | Division |

IS <type> | type() | Checks the type (jsquery) or returns the type name (jsonpath) |

@# | size() | Size (length) of an array |

| x | double() | Numeric value from string |

| x | ceiling() | Nearest integer greater than or equal to value |

| x | floor() | Nearest integer less than or equal to value |

| x | abs() | Absolute value of number |

| x | keyvalue() | Object represented as sequence of key, value and id fields |

| jsquery | jsonpath | Description |

|---|---|---|

= | == | Equality |

< | < | Less than |

<= | <= | Less than or equal to |

> | > | Greater than |

>= | >= | Greater than or equal to |

@> | * | Contains |

<@ | * | Contained by |

IN | - | Search within a list of scalar values |

&& | * | Overlap |

AND | && | Boolean AND |

OR | || | Boolean OR |

NOT | ! | Boolean NOT |

=* | exists | Expression contains 1 or more items |

starts with | Value begins with specified value | |

| x | like_regex | Test string against regex pattern |

| jsquery | jsonpath |

|---|---|

true | true |

false | false |

null | null |

| x | is unknown |

Note that, unlike SQL expressions, you can use the equality operator with "null", whereas you would usually have to state IS NULL. This is because it's not directly equivalent.

jsquery features not present in SQL/JSON.

jsquery supports index hints, but this was necessary as the optimiser has no knowledge of the contents of jsquery strings or statistics related to individual values. So this feature is effectively redundant when it comes to SQL/JSON.Limitations

Only text, numeric and boolean types are supported at present. Datetime is still a work in progress, so these are intended to be supported in JSONB in future.For more information on the jsonpath and SQL/JSON, see the PostgreSQL documentation:

SQL/JSON Path Expressions

jsonpath Type

Paul Ramsey: Waiting for PostGIS 3: Hilbert Geometry Sorting

With the release of PostGIS 3.0, queries that ORDER BY geometry columns will return rows using a Hilbert curve ordering, and do so about twice as fast.

Whuuuut!?!

The history of "ordering by geometry" in PostGIS is mostly pretty bad. Up until version 2.4 (2017), if you did ORDER BY on a geometry column, your rows would be returned using the ordering of the minimum X coordinate value in the geometry.

One of the things users expect of "ordering" is that items that are "close" to each other in the ordered list should also be "close" to each other in the domain of the values. For example, in a set of sales orders ordered by price, rows next to each other have similar prices.

To visualize what geometry ordering looks like, I started with a field of 10,000 random points.

Álvaro Hernández: Benchmarking: Do it with transparency or don't do it at all

Sebastian Insausti: Big Data with PostgreSQL and Apache Spark

PostgreSQL is well known as the most advanced opensource database, and it helps you to manage your data no matter how big, small or different the dataset is, so you can use it to manage or analyze your big data, and of course, there are several ways to make this possible, e.g Apache Spark. In this blog, we’ll see what Apache Spark is and how we can use it to work with our PostgreSQL database.

For big data analytics, we have two different types of analytics:

- Batch analytics: Based on the data collected over a period of time.

- Real-time (stream) analytics: Based on an immediate data for an instant result.

What is Apache Spark?

Apache Spark is a unified analytics engine for large-scale data processing that can work on both batch and real-time analytics in a faster and easier way.

It provides high-level APIs in Java, Scala, Python and R, and an optimized engine that supports general execution graphs.

Apache Spark Libraries

Apache Spark includes different libraries:

- Spark SQL: It’s a module for working with structured data using SQL or a DataFrame API. It provides a common way to access a variety of data sources, including Hive, Avro, Parquet, ORC, JSON, and JDBC. You can even join data across these sources.

- Spark Streaming: It makes easy to build scalable fault-tolerant streaming applications using a language-integrated API to stream processing, letting you write streaming jobs the same way you write batch jobs. It supports Java, Scala and Python. Spark Streaming recovers both lost work and operator state out of the box, without any extra code on your part. It lets you reuse the same code for batch processing, join streams against historical data, or run ad-hoc queries on stream state.

- MLib (Machine Learning): It’s a scalable machine learning library. MLlib contains high-quality algorithms that leverage iteration and can yield better results than the one-pass approximations sometimes used on MapReduce.

- GraphX: It’s an API for graphs and graph-parallel computation. GraphX unifies ETL, exploratory analysis, and iterative graph computation within a single system. You can view the same data as both graphs and collections, transform and join graphs with RDDs efficiently, and write custom iterative graph algorithms using the Pregel API.

Apache Spark Advantages

According to the official documentation, some advantages of Apache Spark are:

- Speed: Run workloads 100x faster. Apache Spark achieves high performance for both batch and streaming data, using a state-of-the-art DAG (Direct Acyclic Graph) scheduler, a query optimizer, and a physical execution engine.

- Ease of Use: Write applications quickly in Java, Scala, Python, R, and SQL. Spark offers over 80 high-level operators that make it easy to build parallel apps. You can use it interactively from the Scala, Python, R, and SQL shells.

- Generality: Combine SQL, streaming, and complex analytics. Spark powers a stack of libraries including SQL and DataFrames, MLlib for machine learning, GraphX, and Spark Streaming. You can combine these libraries seamlessly in the same application.

- Runs Everywhere: Spark runs on Hadoop, Apache Mesos, Kubernetes, standalone, or in the cloud. It can access diverse data sources. You can run Spark using its standalone cluster mode, on EC2, on Hadoop YARN, on Mesos, or on Kubernetes. Access data in HDFS, Alluxio, Apache Cassandra, Apache HBase, Apache Hive, and hundreds of other data sources.

Now, let’s see how we can integrate this with our PostgreSQL database.

How to Use Apache Spark with PostgreSQL

We’ll assume you have your PostgreSQL cluster up and running. For this task, we’ll use a PostgreSQL 11 server running on CentOS7.

First, let’s create our testing database on our PostgreSQL server:

postgres=# CREATE DATABASE testing;

CREATE DATABASE

postgres=# \c testing

You are now connected to database "testing" as user "postgres".Now, we’re going to create a table called t1:

testing=# CREATE TABLE t1 (id int, name text);

CREATE TABLEAnd insert some data there:

testing=# INSERT INTO t1 VALUES (1,'name1');

INSERT 0 1

testing=# INSERT INTO t1 VALUES (2,'name2');

INSERT 0 1Check the data created:

testing=# SELECT * FROM t1;

id | name

----+-------

1 | name1

2 | name2

(2 rows)To connect Apache Spark to our PostgreSQL database, we’ll use a JDBC connector. You can download it from here.

$ wget https://jdbc.postgresql.org/download/postgresql-42.2.6.jarNow, let’s install Apache Spark. For this, we need to download the spark packages from here.

$ wget http://us.mirrors.quenda.co/apache/spark/spark-2.4.3/spark-2.4.3-bin-hadoop2.7.tgz

$ tar zxvf spark-2.4.3-bin-hadoop2.7.tgz

$ cd spark-2.4.3-bin-hadoop2.7/To run the Spark shell we’ll need JAVA installed on our server:

$ yum install javaSo now, we can run our Spark Shell:

$ ./bin/spark-shell

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://ApacheSpark1:4040

Spark context available as 'sc' (master = local[*], app id = local-1563907528854).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.4.3

/_/

Using Scala version 2.11.12 (OpenJDK 64-Bit Server VM, Java 1.8.0_212)

Type in expressions to have them evaluated.

Type :help for more information.

scala>We can access our Spark context Web UI available in the port 4040 on our server:

Into the Spark shell, we need to add the PostgreSQL JDBC driver:

scala> :require /path/to/postgresql-42.2.6.jar

Added '/path/to/postgresql-42.2.6.jar' to classpath.

scala> import java.util.Properties

import java.util.PropertiesAnd add the JDBC information to be used by Spark:

scala> val url = "jdbc:postgresql://localhost:5432/testing"

url: String = jdbc:postgresql://localhost:5432/testing

scala> val connectionProperties = new Properties()

connectionProperties: java.util.Properties = {}

scala> connectionProperties.setProperty("Driver", "org.postgresql.Driver")

res6: Object = nullNow, we can execute SQL queries. First, let’s define query1 as SELECT * FROM t1, our testing table.

scala> val query1 = "(SELECT * FROM t1) as q1"

query1: String = (SELECT * FROM t1) as q1And create the DataFrame:

scala> val query1df = spark.read.jdbc(url, query1, connectionProperties)

query1df: org.apache.spark.sql.DataFrame = [id: int, name: string]

So now, we can perform an action over this DataFrame:

scala> query1df.show()

+---+-----+

| id| name|

+---+-----+

| 1|name1|

| 2|name2|

+---+-----+scala> query1df.explain

== Physical Plan ==

*(1) Scan JDBCRelation((SELECT * FROM t1) as q1) [numPartitions=1] [id#19,name#20] PushedFilters: [], ReadSchema: struct<id:int,name:string>We can add more values and run it again just to confirm that it’s returning the current values.

PostgreSQL

testing=# INSERT INTO t1 VALUES (10,'name10'), (11,'name11'), (12,'name12'), (13,'name13'), (14,'name14'), (15,'name15');

INSERT 0 6

testing=# SELECT * FROM t1;

id | name

----+--------

1 | name1

2 | name2

10 | name10

11 | name11

12 | name12

13 | name13

14 | name14

15 | name15

(8 rows)Spark

scala> query1df.show()

+---+------+

| id| name|

+---+------+

| 1| name1|

| 2| name2|

| 10|name10|

| 11|name11|

| 12|name12|

| 13|name13|

| 14|name14|

| 15|name15|

+---+------+In our example, we’re showing only how Apache Spark works with our PostgreSQL database, not how it manages our Big Data information.

Conclusion

Nowadays, it’s pretty common to have the challenge to manage big data in a company, and as we could see, we can use Apache Spark to cope with it and make use of all the features that we mentioned earlier. The big data is a huge world, so you can check the official documentation for more information about the usage of Apache Spark and PostgreSQL and fit it to your requirements.

Michael Paquier: Postgres 12 highlight - Replication slot copy

Replication slots can be used in streaming replication, with physical replication slots, and logical decoding, with logical replication slots, to retain WAL in a more precise way than wal_keep_segments so as past WAL segments are removed at checkpoint using the WAL position a client consuming the slot sees fit. A feature related to replication slots has been committed to PostgreSQL 12:

commit: 9f06d79ef831ffa333f908f6d3debdb654292414

author: Alvaro Herrera <alvherre@alvh.no-ip.org>

date: Fri, 5 Apr 2019 14:52:45 -0300

Add facility to copy replication slots

This allows the user to create duplicates of existing replication slots,

either logical or physical, and even changing properties such as whether

they are temporary or the output plugin used.

There are multiple uses for this, such as initializing multiple replicas

using the slot for one base backup; when doing investigation of logical

replication issues; and to select a different output plugins.

Author: Masahiko Sawada

Reviewed-by: Michael Paquier, Andres Freund, Petr Jelinek

Discussion: https://postgr.es/m/CAD21AoAm7XX8y_tOPP6j4Nzzch12FvA1wPqiO690RCk+uYVstg@mail.gmail.com

This introduces two new SQL functions adapted for each slot type:

- pg_copy_logical_replication_slot

- pg_copy_physical_replication_slot

By default pg_basebackup uses a temporary replication slot to make sure that while transferring the data of the main data folder the WAL segments necessary for recovery from the beginning to the end of the backup are transferred properly, and that the backup does not fail in the middle of processing. In this case the slot is called pg_basebackup_N where N is the PID of the backend process running the replication connection. However there are cases where it makes sense to not use a temporary slot but a permanent one, particularly when reusing a base backup as a standby with no WAL archiving around, so as it is possible to keep WAL around for longer without having a primary’s checkpoint interfere with the recycling of WAL segments. One major take of course with replication slots is that they require a closer monitoring of the local pg_wal/ folder, as if its partition gets full PostgreSQL would immediately stop.

In the case of a physical slot, a copy is useful when creating multiple standbys from the same base backup. As a replication slot can only be consumed by one slot, it reduces the portability of a given base backup, however it is possible to do the following:

- Complete a base backup with pg_basebackup –slot using a permanent slot.

- Create one or more copies of the original slot.

- Use each slot for one standby, which release WAL at their own pace.

Another property of the copy functions is that it is possible to switch a physical slot from temporary to permanent and vice-versa. Here is for example how to create a slot from a permanent one (controlled by the third argument of the function) which retains WAL immediately (controlled by the second argument). The copy of the slot will mark the restart_lsn of the origin slot to be the same as the target:

=# SELECT * FROM pg_create_physical_replication_slot('physical_slot_1', true, false);

slot_name | lsn

-----------------+-----------

physical_slot_1 | 0/15F2A58

(1 row)

=# select * FROM pg_copy_physical_replication_slot('physical_slot_1', 'physical_slot_2');

slot_name | lsn

-----------------+------

physical_slot_2 | null

(1 row)

=# SELECT slot_name, restart_lsn FROM pg_replication_slots;

slot_name | restart_lsn

-----------------+-------------

physical_slot_1 | 0/15CF098

physical_slot_2 | 0/15CF098

(2 rows)

Note that it is not possible to copy a physical slot to become a logical one, but that a slot can become temporary after being copied from a permanent one, and that the copied temporary slot will be associated to the session doing the copy:

=# SELECT pg_copy_logical_replication_slot('physical_slot_1', 'logical_slot_2');

ERROR: 0A000: cannot copy logical replication slot "physical_slot_1" as a physical replication slot

LOCATION: copy_replication_slot, slotfuncs.c:673

=# SELECT * FROM pg_copy_physical_replication_slot('physical_slot_1', 'physical_slot_temp', true);

slot_name | lsn

--------------------+------

physical_slot_temp | null

(1 row)

=# SELECT slot_name, temporary, restart_lsn FROM pg_replication_slots;

slot_name | temporary | restart_lsn

--------------------+-----------+-------------

physical_slot_1 | f | 0/15CF098

physical_slot_2 | f | 0/15CF098

physical_slot_temp | t | 0/15CF098

(3 rows)

The copy of logical slots also has many usages. As logical replication makes use of a slot on the publication side which is then consumed by a subscription, this makes the debugging of such configurations easier, particularly if there is a conflict of some kind on the target server. The most interesting property is that it is possible to change two properties of a slot when copying it:

- Change a slot from being permanent or temporary.

- More importantly, change the output plugin of a slot.

In the context of logical replication, the output plugin being used is pgoutput, and here is how to copy a logical slot with a new, different plugin. At creation the third argument controls if a slot is temporary or not:

=# SELECT * FROM pg_create_logical_replication_slot('logical_slot_1', 'pgoutput', false);

slot_name | lsn

----------------+-----------

logical_slot_1 | 0/15CF7C0

(1 row)

=# SELECT * FROM pg_copy_logical_replication_slot('logical_slot_1', 'logical_slot_2', false, 'test_decoding');

slot_name | lsn

----------------+-----------

logical_slot_2 | 0/15CF7C0

(1 row)

=# SELECT slot_name, restart_lsn, plugin FROM pg_replication_slots

WHERE slot_type = 'logical';

slot_name | restart_lsn | plugin

----------------+-------------+---------------

logical_slot_1 | 0/15CF788 | pgoutput

logical_slot_2 | 0/15CF788 | test_decoding

(2 rows)

And then the secondary slot can be looked at with more understandable data as it prints text records of logical changes happening. This can be consumed with the SQL functions like pg_logical_slot_get_changes as well as a client like pg_recvlogical.

Hans-Juergen Schoenig: Combined indexes vs. separate indexes in PostgreSQL

A “composite index”, also known as “concatenated index”, is an index on multiple columns in a table. Many people are wondering, what is more beneficial: Using separate or using composite indexes? Whenever we do training, consulting or support this question is high up on the agenda and many people keep asking this question. Therefore, I decided to shed some light on this question.

Which indexes shall one create?

To discuss the topic on a more practical level, I created a table consisting of three columns. Then I loaded 1 million rows and added a composite index covering all three columns:

test=# CREATE TABLE t_data (a int, b int, c int); CREATE TABLE test=# INSERT INTO t_data SELECT random()*100000, random()*100000, random()*100000 FROM generate_series(1, 1000000); INSERT 0 1000000 test=# CREATE INDEX idx_data ON t_data(a, b, c); CREATE INDEX

The layout of the table is therefore as follows:

test=# \d t_data

Table "public.t_data"

Column | Type | Collation | Nullable | Default

--------+---------+-----------+----------+---------

a | integer | | |

b | integer | | |

c | integer | | |

Indexes:

"idx_data" btree (a, b, c)

Let us run ANALYZE now to ensure that optimizer statistics are there. Usually autovacuum will kick in and create statistics for your table, but to make sure running ANALYZE does not hurt in this case.

test=# ANALYZE t_data; ANALYZE

PostgreSQL will rearrange filters for you

The first important thing to observe is that PostgreSQL will try to arrange the filters in your query for you. The following query will filter on all indexed columns:

test=# explain SELECT *

FROM t_data

WHERE c = 10

AND b = 20

AND a = 10;

QUERY PLAN

---------------------------------------------------

Index Only Scan using idx_data on t_data

(cost=0.42..4.45 rows=1 width=12)

Index Cond: ((a = 10) AND (b = 20) AND (c = 10))

(2 rows)

As you can see we filtered for c, b, a but the optimizer changed the order of those conditions and turned it into a, b, c to make sure that the index we created suits the query. There are some important things to learn here:

- The order of the conditions in your WHERE clause makes no difference

- PostgreSQL will find the right indexes automatically

Also, keep in mind that PostgreSQL knows that equality is transitive and can infer conditions from that:

test=# explain SELECT *

FROM t_data

WHERE c = 10

AND b = a

AND a = c;

QUERY PLAN

---------------------------------------------------

Index Only Scan using idx_data on t_data

(cost=0.42..4.45 rows=1 width=12)

Index Cond: ((a = 10) AND (b = 10) AND (c = 10))

(2 rows)

What you can see here is that PostgreSQL figured out automatically that a, b and c are actually the same.

Using parts of an index

However, if you have a composite index it is not necessary to filter on all columns. It is also ok to use the first or the first and second field to filter on. Here is an example:

test=# explain SELECT *

FROM t_data

WHERE a = 10;

QUERY PLAN

------------------------------------------

Index Only Scan using idx_data on t_data

(cost=0.42..4.62 rows=11 width=12)

Index Cond: (a = 10)

(2 rows)

As you can see PostgreSQL can still use the same index. An index is simple a sorted list, which happens to be ordered by three fields. In multi-column indexes, this ordering is a so-called &ldauo;lexicographical ordering”: the rows are first sorted by the first index column. Rows with the same first column are sorted by the second column, and so on. It is perfectly fine to just make use of the first columns. Talking about sorted lists:

test=# explain SELECT *

FROM t_data

WHERE a = 10

ORDER BY b, c;

QUERY PLAN

-------------------------------------------

Index Only Scan using idx_data on t_data

(cost=0.42..4.62 rows=11 width=12)

Index Cond: (a = 10)

(2 rows)

An index can also provide you with sorted data. In this case we filter on a and order by the remaining two columns b and c.

When composite indexes don’t work

Let us try to understand, when a composite index does not help to speed up a query. Here is an example:

test=# explain SELECT *

FROM t_data

WHERE b = 10;

QUERY PLAN

---------------------------------------------------

Gather (cost=1000.00..11615.43 rows=11 width=12)

Workers Planned: 2

-> Parallel Seq Scan on t_data

(cost=0.00..10614.33 rows=5 width=12)

Filter: (b = 10)

(4 rows)

In this case we are filtering on the second column. Remember: A btree index is nothing more than a sorted list. In our case it is sorted by a, b, c. So naturally we cannot usefully filter on this field. However, in some rare cases it can happen that you will still see an index scan. Some people see that as proof “that it does indeed work”. But, you will see an index scan for the wrong reason: PostgreSQL is able to read an index completely – not to filter but to reduce the amount of I/O it takes to read a table. If a table has many columns it might be faster to read the index than to digest the table.

Let us simulate this:

test=# SET seq_page_cost TO 10000; SET

By telling PostgreSQL that sequential I/O is more expensive than it really is you will see that the optimizer turns to an index scan:

test=# explain analyze

SELECT *

FROM t_data

WHERE b = 10;

QUERY PLAN

--------------------------------------------------

Index Only Scan using idx_data on t_data

(cost=0.42..22892.53 rows=11 width=12)

(actual time=7.626..63.087 rows=8 loops=1)

Index Cond: (b = 10)

Heap Fetches: 0

Planning Time: 0.121 ms

Execution Time: 63.122 ms

(5 rows)

However, the execution time is 63 milliseconds, which is A LOT more than if we had done this for the first column in the index.

Note that “Index Cond: (b = 10)” means something different here than in the previous examples: while before we had index scan conditions, here we have an index filter condition. It is not ideal that the two look the same in the EXPLAIN output.

Understanding bitmap index scans in PostgreSQL

PostgreSQL is able to use more than one index at the same time. This is especially important if you are using OR as shown in the next example:

test=# SET seq_page_cost TO default;

SET

test=# explain SELECT *

FROM t_data

WHERE a = 4

OR a = 23232;

QUERY PLAN

----------------------------------------------------

Bitmap Heap Scan on t_data

(cost=9.03..93.15 rows=22 width=12)

Recheck Cond: ((a = 4) OR (a = 23232))

-> BitmapOr (cost=9.03..9.03 rows=22 width=0)

-> Bitmap Index Scan on idx_data

(cost=0.00..4.51 rows=11 width=0)

Index Cond: (a = 4)

-> Bitmap Index Scan on idx_data

(cost=0.00..4.51 rows=11 width=0)

Index Cond: (a = 23232)

(7 rows)

What PostgreSQL will do is to decide on a bitmap scan. The idea is to first consult all the indexes to compile a list of rows / blocks, which then have to be fetched from the table (= heap). This is usually a lot better than a sequential scan. In my example the same index is even used twice. However, in real life you might see bitmap scans involving various indexes more often.

Using a subset of indexes in a single SQL statement

In many cases using too many indexes can even be counterproductive. The planner will make sure that enough indexes are chosen but it won’t take too many. Let us take a look at the following example:

test=# DROP INDEX idx_data;

DROP INDEX

test=# CREATE INDEX idx_a ON t_data (a);

CREATE INDEX

test=# CREATE INDEX idx_a ON t_data (b);

CREATE INDEX

test=# CREATE INDEX idx_a ON t_data (c);

CREATE INDEX

test=# \d t_data

Table "public.t_data"

Column | Type | Collation | Nullable | Default

--------+---------+-----------+----------+---------

a | integer | | |

b | integer | | |

c | integer | | |

Indexes:

"idx_a" btree (a)

"idx_b" btree (b)

"idx_c" btree (c)

The following query filters on all columns again. This time I did not use a single combined index but decided on separate indexes, which is usually more inefficient. Here is what happens:

test=# explain SELECT *

FROM t_data

WHERE a = 10

AND b = 20

AND c = 30;

QUERY PLAN

----------------------------------------------------

Bitmap Heap Scan on t_data

(cost=9.27..13.28 rows=1 width=12)

Recheck Cond: ((c = 30) AND (b = 20))

Filter: (a = 10)

-> BitmapAnd (cost=9.27..9.27 rows=1 width=0)

-> Bitmap Index Scan on idx_c

(cost=0.00..4.51 rows=11 width=0)

Index Cond: (c = 30)

-> Bitmap Index Scan on idx_b

(cost=0.00..4.51 rows=11 width=0)

Index Cond: (b = 20)

(8 rows)

Let us take a closer look at the plan. The query filters on 3 columns BUT PostgreSQL only decided on two indexes. Why is that the case? We have imported 1 million rows. Each column contains 100.000 distinct values, which means that every value occurs 10 times. What is the point in fetching a couple of rows from every index even if two indexes already narrow down the result sufficiently enough? This is exactly what happens.

Optimizing min / max in SQL queries

Indexes are not only about filtering. It will also help you to mind the lowest and the highest value in a column as shown by the following SQL statement:

test=# explain SELECT min(a), max(b) FROM t_data;

QUERY PLAN

-----------------------------------------------------------------------

Result (cost=0.91..0.92 rows=1 width=8)

InitPlan 1 (returns $0)

-> Limit (cost=0.42..0.45 rows=1 width=4)

-> Index Only Scan using idx_a on t_data

(cost=0.42..28496.42 rows=1000000 width=4)

Index Cond: (a IS NOT NULL)

InitPlan 2 (returns $1)

-> Limit (cost=0.42..0.45 rows=1 width=4)

-> Index Only Scan Backward using idx_b on t_data t_data_1

(cost=0.42..28496.42 rows=1000000 width=4)

Index Cond: (b IS NOT NULL)

(9 rows)

As you can see PostgreSQL is already pretty sophisticated. If you are looking for good performance it certainly makes sense to see, how PostgreSQL handles indexes and review your code in order to speed thing up. If you want to learn more about performance, consider checking out Laurenzs Albe’s post about speeding up count(*). Also, if you are not sure, why your database is slow, check out my post on PostgreSQL database performance, which explains, how to find slow queries.

The post Combined indexes vs. separate indexes in PostgreSQL appeared first on Cybertec.

Paul Ramsey: PostGIS Overlays

One question that comes up often during our PostGIS training is “how do I do an overlay?” The terminology can vary: sometimes they call the operation a “union” sometimes an “intersect”. What they mean is, “can you turn a collection of overlapping polygons into a collection of non-overlapping polygons that retain information about the overlapping polygons that formed them?”

So an overlapping set of three circles becomes a non-overlapping set of 7 polygons.

Calculating the overlapping parts of a pair of shapes is easy, using the ST_Intersection() function in PostGIS, but that only works for pairs, and doesn’t capture the areas that have no overlaps at all.

How can we handle multiple overlaps and get out a polygon set that covers 100% of the area of the input sets? By taking the polygon geometry apart into lines, and then building new polygons back up.

Let’s construct a synthetic example: first, generate a collection of random points, using a Gaussian distribution, so there’s more overlap in the middle. The crazy math in the SQL below just converts the uniform random numbers from the random() function into normally distributed numbers.

CREATETABLEptsASWITHrandsAS(SELECTgenerate_seriesasid,random()ASu1,random()ASu2FROMgenerate_series(1,100))SELECTid,ST_SetSRID(ST_MakePoint(50*sqrt(-2*ln(u1))*cos(2*pi()*u2),50*sqrt(-2*ln(u1))*sin(2*pi()*u2)),4326)ASgeomFROMrands;The result looks like this:

Now, we turn the points into circles, big enough to have overlaps.

CREATETABLEcirclesASSELECTid,ST_Buffer(geom,10)ASgeomFROMpts;Which looks like this:

Now it’s time to take the polygons apart. In this case we’ll take the exterior ring of the circles, using ST_ExteriorRing(). If we were dealing with complex polygons with holes, we’d have to use ST_DumpRings(). Once we have the rings, we want to make sure that everywhere rings cross the lines are broken, so that no lines cross, they only touch at their end points. We do that with the ST_Union() function.

CREATETABLEboundariesASSELECTST_Union(ST_ExteriorRing(geom))ASgeomFROMcircles;What comes out is just lines, but with end points at every crossing.

Now that we have noded lines, we can collect them into a multi-linestring and feed them to ST_Polygonize() to generate polygons. The polygons come out as one big multi-polygon, so we’ll use ST_Dump() to convert it into a table with one row per polygon.

CREATESEQUENCEpolyseq;CREATETABLEpolysASSELECTnextval('polyseq')ASid,(ST_Dump(ST_Polygonize(geom))).geomASgeomFROMboundaries;Now we have a set of polygons with no overlaps, only one polygon per area.

So, how do we figure out how many overlaps contributed to each incoming polygon? We can join the centroids of the new small polygons with the set of original circles, and calculate how many circles contain each centroid point.

A spatial join will allow us to calculate the number of overlaps.

ALTERTABLEpolysADDCOLUMNcountINTEGERDEFAULT0;UPDATEPOLYSsetcount=p.countFROM(SELECTcount(*)AScount,p.idASidFROMpolyspJOINcirclescONST_Contains(c.geom,ST_PointOnSurface(p.geom))GROUPBYp.id)ASpWHEREp.id=polys.id;That’s it! Now we have a single coverage of the area, where each polygon knows how much overlap contributed to it. Ironically, when visualized using the coverage count as a variable in the color ramp, it looks a lot like the original image, which was created with a simple transparency effect. However, the point here is that we’ve created new data, in the count attribute of the new polygon layer.

The same decompose-and-rebuild-and-join-centroids trick can be used to overlay all kinds of features, and to carry over attributes from the original input data, achieving the classic “GIS overlay” workflow. Happy geometry mashing!

Ibrar Ahmed: Parallelism in PostgreSQL

PostgreSQL is one of the finest object-relational databases, and its architecture is process-based instead of thread-based. While almost all the current database systems utilize threads for parallelism, PostgreSQL’s process-based architecture was implemented prior to POSIX threads. PostgreSQL launches a process “postmaster” on startup, and after that spans new process whenever a new client connects to the PostgreSQL.

PostgreSQL is one of the finest object-relational databases, and its architecture is process-based instead of thread-based. While almost all the current database systems utilize threads for parallelism, PostgreSQL’s process-based architecture was implemented prior to POSIX threads. PostgreSQL launches a process “postmaster” on startup, and after that spans new process whenever a new client connects to the PostgreSQL.

Before version 10 there was no parallelism in a single connection. It is true that multiple queries from the different clients can have parallelism because of process architecture, but they couldn’t gain any performance benefit from one another. In other words, a single query runs serially and did not have parallelism. This is a huge limitation because a single query cannot utilize the multi-core. Parallelism in PostgreSQL was introduced from version 9.6. Parallelism, in a sense, is where a single process can have multiple threads to query the system and utilize the multicore in a system. This gives PostgreSQL intra-query parallelism.

Parallelism in PostgreSQL was implemented as part of multiple features which cover sequential scans, aggregates, and joins.

Components of Parallelism in PostgreSQL

There are three important components of parallelism in PostgreSQL. These are the process itself, gather, and workers. Without parallelism the process itself handles all the data, however, when planner decides that a query or part of it can be parallelized, it adds a Gather node within the parallelizable portion of the plan and makes a gather root node of that subtree. Query execution starts at the process (leader) level and all the serial parts of the plan are run by the leader. However, if parallelism is enabled and permissible for any part (or whole) of the query, then gather node with a set of workers is allocated for it. Workers are the threads that run in parallel with part of the tree (partial-plan) that needs to be parallelized. The relation’s blocks are divided amongst threads such that the relation remains sequential. The number of threads is governed by settings as set in PostgreSQL’s configuration file. The workers coordinate/communicate using shared memory, and once workers have completed their work, the results are passed on to the leader for accumulation.

Parallel Sequential Scans

In PostgreSQL 9.6, support for the parallel sequential scan was added. A sequential scan is a scan on a table in which a sequence of blocks is evaluated one after the other. This, by its very nature, allows parallelism. So this was a natural candidate for the first implementation of parallelism. In this, the whole table is sequentially scanned in multiple worker threads. Here is the simple query where we query the pgbench_accounts table rows (63165) which has 1500000000 tuples. The total execution time is 4343080ms. As there is no index defined, the sequential scan is used. The whole table is scanned in a single process with no thread. Therefore the single core of CPU is used regardless of how many cores are available.

db=# EXPLAIN ANALYZE SELECT *

FROM pgbench_accounts

WHERE abalance > 0;

QUERY PLAN

----------------------------------------------------------------------

Seq Scan on pgbench_accounts (cost=0.00..73708261.04 rows=1 width=97)

(actual time=6868.238..4343052.233 rows=63165 loops=1)

Filter: (abalance > 0)

Rows Removed by Filter: 1499936835

Planning Time: 1.155 ms

Execution Time: 4343080.557 ms

(5 rows)What if these 1,500,000,000 rows scanned parallel using “10” workers within a process? It will reduce the execution time drastically.

db=# EXPLAIN ANALYZE select * from pgbench_accounts where abalance > 0;

QUERY PLAN

----------------------------------------------------------------------

Gather (cost=1000.00..45010087.20 rows=1 width=97)

(actual time=14356.160..1628287.828 rows=63165 loops=1)

Workers Planned: 10

Workers Launched: 10

-> Parallel Seq Scan on pgbench_accounts

(cost=0.00..45009087.10 rows=1 width=97)

(actual time=43694.076..1628068.096 rows=5742 loops=11)

Filter: (abalance > 0)

Rows Removed by Filter: 136357894

Planning Time: 37.714 ms

Execution Time: 1628295.442 ms

(8 rows)Now the total execution time is 1628295ms; this is a 266% improvement while using 10 workers thread used to scan.

Query used for the Benchmark: SELECT * FROM pgbench_accounts WHERE abalance > 0;

Size of Table: 426GB

Total Rows in Table: 1500000000

The system used for the Benchmark:

CPU: 2 Intel(R) Xeon(R) CPU E5-2643 v2 @ 3.50GHz

RAM: 256GB DDR3 1600

DISK: ST3000NM0033

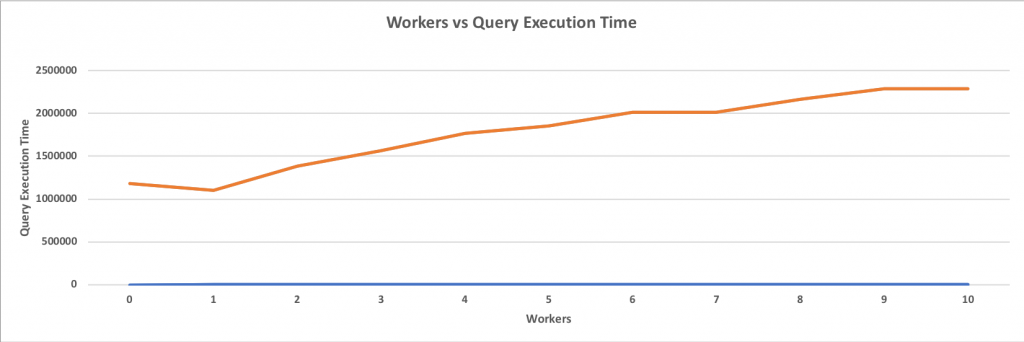

The above graph clearly shows how parallelism improves performance for a sequential scan. When a single worker is added, the performance understandably degrades as no parallelism is gained, but the creation of an additional gather node and a single work adds overhead. However, with more than one worker thread, the performance improves significantly. Also, it is important to note that performance doesn’t increase in a linear or exponential fashion. It improves gradually until the addition of more workers will not give any performance boost; sort of like approaching a horizontal asymptote. This benchmark was performed on a 64-core machine, and it is clear that having more than 10 workers will not give any significant performance boost.

Parallel Aggregates

In databases, calculating aggregates are very expensive operations. When evaluated in a single process, these take a reasonably long time. In PostgreSQL 9.6, the ability to calculate these in parallel was added by simply dividing these in chunks (a divide and conquer strategy). This allowed multiple workers to calculate the part of aggregate before the final value(s) based on these calculations was calculated by the leader. More technically speaking, PartialAggregate nodes are added to a plan tree, and each PartialAggregate node takes the output from one worker. These outputs are then emitted to a FinalizeAggregate node that combines the aggregates from multiple (all) PartialAggregate nodes. So effectively, the parallel partial plan includes a FinalizeAggregate node at the root and a Gather node which will have PartialAggregate nodes as children.

db=# EXPLAIN ANALYZE SELECT count(*) from pgbench_accounts;

QUERY PLAN

----------------------------------------------------------------------

Aggregate (cost=73708261.04..73708261.05 rows=1 width=8)

(actual time=2025408.357..2025408.358 rows=1 loops=1)

-> Seq Scan on pgbench_accounts (cost=0.00..67330666.83 rows=2551037683 width=0)

(actual time=8.162..1963979.618 rows=1500000000 loops=1)

Planning Time: 54.295 ms

Execution Time: 2025419.744 ms

(4 rows)Following is an example of a plan when an aggregate is to be evaluated in parallel. You can clearly see performance improvement here.

db=# EXPLAIN ANALYZE SELECT count(*) from pgbench_accounts; QUERY PLAN ---------------------------------------------------------------------- Finalize Aggregate (cost=45010088.14..45010088.15 rows=1 width=8) (actual time=1737802.625..1737802.625 rows=1 loops=1) -> Gather (cost=45010087.10..45010088.11 rows=10 width=8) (actual time=1737791.426..1737808.572 rows=11 loops=1) Workers Planned: 10 Workers Launched: 10 -> Partial Aggregate (cost=45009087.10..45009087.11 rows=1 width=8) (actual time=1737752.333..1737752.334 rows=1 loops=11) -> Parallel Seq Scan on pgbench_accounts (cost=0.00..44371327.68 rows=255103768 width=0) (actual time=7.037..1731083.005 rows=136363636 loops=11) Planning Time: 46.031 ms Execution Time: 1737817.346 ms (8 rows)

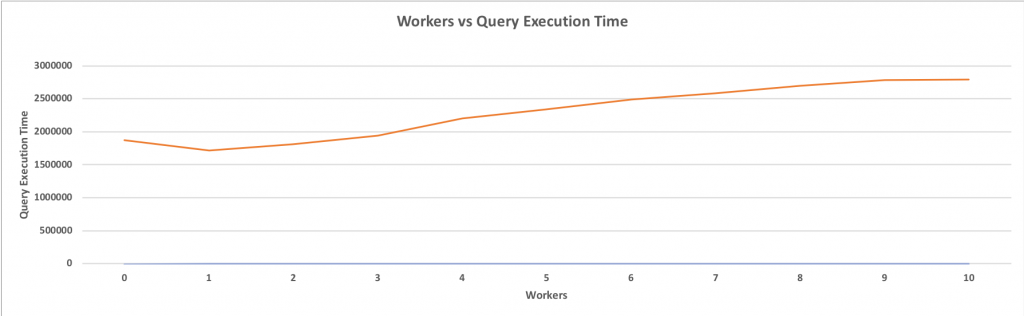

With parallel aggregates, in this particular case, we get a performance boost of just over 16% as the execution time of 2025419.744 is reduced to 1737817.346 when 10 parallel workers are involved.

Query used for the Benchmark: SELECT count(*) FROM pgbench_accounts WHERE abalance > 0;

Size of Table: 426GB

Total Rows in Table: 1500000000

The system used for the Benchmark:

CPU: 2 Intel(R) Xeon(R) CPU E5-2643 v2 @ 3.50GHz

RAM: 256GB DDR3 1600

DISK: ST3000NM0033

Parallel Index (B-Tree) Scans

The parallel support for B-Tree index means index pages are scanned in parallel. The B-Tree index is one of the most used indexes in PostgreSQL. In a parallel version of B-Tree, a worker scans the B-Tree and when it reaches its leaf node, it then scans the block and triggers the blocked waiting worker to scan the next block.

Confused? Let’s look at an example of this. Suppose we have a table foo with id and name columns, with 18 rows of data. We create an index on the id column of table foo. A system column CTID is attached with each row of table which identifies the physical location of the row. There are two values in the CTID column: the block number and the offset.

postgres=# SELECT ctid, id FROM foo; ctid | id --------+----- (0,55) | 200 (0,56) | 300 (0,57) | 210 (0,58) | 220 (0,59) | 230 (0,60) | 203 (0,61) | 204 (0,62) | 300 (0,63) | 301 (0,64) | 302 (0,65) | 301 (0,66) | 302 (1,31) | 100 (1,32) | 101 (1,33) | 102 (1,34) | 103 (1,35) | 104 (1,36) | 105 (18 rows)

Let’s create the B-Tree index on that table’s id column.

CREATE INDEX foo_idx ON foo(id)

Suppose we want to select values where id <= 200 with 2 workers. Worker-0 will start from the root node and scan until the leaf node 200. It’ll handover the next block under node 105 to Worker-1, which is in a blocked and wait-state. If there are other workers, blocks are divided into the workers. A similar pattern is repeated until the scan is completed.

Parallel Bitmap Scans

To parallelize a bitmap heap scan, we need to be able to divide blocks among workers in a way very similar to parallel sequential scan. To do that, a scan on one or more indexes is done and a bitmap indicating which blocks are to be visited is created. This is done by a leader process, i.e. this part of the scan is run sequentially. However, the parallelism kicks in when the identified blocks are passed to workers, the same way as in a parallel sequential scan.

Parallel Joins

Parallelism in the merge joins support is also one of the hottest features added in this release. In this, a table joins with other tables’ inner loop hash or merge. In any case, there is no parallelism supported in the inner loop. The entire loop is scanned as a whole, and the parallelism occurs when each worker executes the inner loop as a whole. The results of each join sent to gather accumulate and produce the final results.

Summary

It is obvious from what we’ve already discussed in this blog that parallelism gives significant performance boosts for some, slight gains for others, and may cause performance degradation in some cases. Ensure that parallel_setup_cost or parallel_tuple_cost are set up correctly to enable the query planner to choose a parallel plan. Even after setting low values for these GUIs, if a parallel plan is not produced, refer to the PostgreSQL documentation on parallelism for details.

For a parallel plan, you can get per-worker statistics for each plan node to understand how the load is distributed amongst workers. You can do that through EXPLAIN (ANALYZE, VERBOSE). As with any other performance feature, there is no one rule that applies to all workloads. Parallelism should be carefully configured for whatever the need may be, and you must ensure that the probability of gaining performance is significantly higher than the probability of a drop in performance.

Viorel Tabara: Cloud Vendor Deep-Dive: PostgreSQL on AWS Aurora

How deep should we go with this? I’ll start by saying that as of this writing, I could locate only 3 books on Amazon about PostgreSQL in the cloud, and 117 discussions on PostgreSQL mailing lists about Aurora PostgreSQL. That doesn’t look like a lot, and it leaves me, the curious PostgreSQL end user, with the official documentation as the only place where I could really learn some more. As I don’t have the ability, nor the knowledge to adventure myself much deeper, there is AWS re:Invent 2018 for those who are looking for that kind of thrill. I can settle for Werner’s article on quorums.

To get warmed up, I started from the Aurora PostgreSQL homepage where I noted that the benchmark showing that Aurora PostgreSQL is three times faster than a standard PostgreSQL running on the same hardware dates back to PostgreSQL 9.6. As I’ve learned later, 9.6.9 is currently the default option when setting up a new cluster. That is very good news for those who don’t want to, or cannot upgrade right away. And why only 99.99% availability? One explanation can be found in Bruce Momjian’s article.

Compatibility

According to AWS, Aurora PostgreSQL is a drop-in replacement for PostgreSQL, and the documentation states:

The code, tools, and applications you use today with your existing MySQL and PostgreSQL databases can be used with Aurora.

That is reinforced by Aurora FAQs:

It means that most of the code, applications, drivers and tools you already use today with your PostgreSQL databases can be used with Aurora with little or no change. The Amazon Aurora database engine is designed to be wire-compatible with PostgreSQL 9.6 and 10, and supports the same set of PostgreSQL extensions that are supported with RDS for PostgreSQL 9.6 and 10, making it easy to move applications between the two engines.

“most” in the above text suggests that there isn’t a 100% guarantee in which case those seeking certainty should consider purchasing technical support from either AWS Professional Services, or Aamazon Aurora partners. As a side note, I did notice that none of the PostgreSQL professional Hosting Providers employing core community contributors are on that list.

From Aurora FAQs page we also learn that Aurora PostgreSQL supports the same extensions as RDS, which in turn lists most of the community extensions and a few extras.

Concepts

As part of Amazon RDS, Aurora PostgreSQL comes with its own terminology:

- Cluster: A Primary DB instance in read/write mode and zero or more Aurora Replicas. The primary DB is often labeled a Master in `AWS diagrams`_, or Writer in the AWS Console. Based on the reference diagram we can make an interesting observation: Aurora writes three times. As the latency between the AZs is typically higher than within the same AZ, the transaction is considered committed as soon it's written on the data copy within the same AZ, otherwise the latency and potential outages between AZs.

- Cluster Volume: Virtual database storage volume spanning multiple AZs.

- Aurora URL: A `host:port` pair.

- Cluster Endpoint: Aurora URL for the Primary DB. There is one Cluster Endpoint.

- Reader Endpoint: Aurora URL for the replica set. To make an analogy with DNS it's an alias (CNAME). Read requests are load balanced between available replicas.

- Custom Endpoint: Aurora URL to a group consisting of one or more DB instances.

- Instance Endpoint: Aurora URL to a specific DB instance.

- Aurora Version: Product version returned by `SELECT AURORA_VERSION();`.

PostgreSQL Performance and Monitoring on AWS Aurora

Sizing

Aurora PostgreSQL applies a best guess configuration which is based on the DB instance size and storage capacity, leaving further tuning to the DBA through the use of DB Parameters groups.

When selecting the DB instance, base your selection on the desired value for max_connections.

Scaling

Aurora PostgreSQL features auto and manual scaling. Horizontal scaling of read replicas is automated through the use of performance metrics. Vertical scaling can be automated via APIs.

Horizontal scaling takes the offline for a few minutes while replacing compute engine and performing any maintenance operations (upgrades, patching). Therefore AWS recommend performing such operations during maintenance windows.

Scaling in both directions is a breeze:

At the storage level, space is added in 10G increments. Allocated storage is never reclaimed, see below for how to address this limitation.

Storage

As mentioned above, Aurora PostgreSQL was engineered to take advantage of quorums in order to improve performance consistency.

Since the underlying storage is shared by all DB instances within the same cluster, no additional writes on standby nodes are required. Also, adding or removing DB instances doesn’t change the underlying data.

Wondering what those IOs units mean on the monthly bill? Aurora FAQs comes to the rescue again to explain what an IO is, in the context of monitoring and billing. A Read IO as the equivalent of an 8KiB database page read, and a Write IO as the equivalent of 4KiB written to the storage layer.

High Concurrency

In order to take full advantage of Aurora’s high-concurrency design, it is recommended that applications are configured to drive a large number of concurrent queries and transactions.

Applications designed to direct read and write queries to respectively standby and primary database nodes will benefit from Aurora PostgreSQL reader replica endpoint.

Connections are load balanced between read replicas.

Using custom endpoints database instances with more capacity can be grouped together in order to run an intensive workload such as analytics.

DB Instance Endpoints can be used for fine-grained load balancing or fast failover.

Note that in order for the Reader Endpoints to load balance individual queries, each query must be sent as a new connection.

Caching

Aurora PostgreSQL uses a Survivable Cache Warming technique which ensures that the date in the buffer cache is preserved, eliminating the need for repopulating or warming-up the cache following a database restart.

Replication

Replication lag time between replicas is kept within single digit millisecond. Although not available for PostgreSQL, it’s good to know that cross-region replication lag is kept within 10s of milliseconds.

According to documentation replica lag increases during periods of heavy write requests.

Query Execution Plans

Based on the assumption that query performance degrades over time due to various database changes, the role of this Aurora PostgreSQL component is to maintain a list of approved or rejected query execution plans.

Plans are approved or rejected using either proactive or reactive methods.

When an execution plan is marked as rejected, the Query Execution Plan overrides the PostgreSQL optimizer decisions and prevents the “bad” plan from being executed.

This feature requires Aurora 2.1.0 or later.

PostgreSQL High Availability and Replication on AWS Aurora

At the storage layer, Aurora PostgreSQL ensures durability by replicating each 10GB of storage volume, six times across 3 AZs (each region consists of typically 3 AZs) using physical synchronous replication. That makes it possible for database writes to continue working even when 2 copies of data are lost. Read availability survives the loss of 3 copies of data.

Read replicas ensure that a failed primary instance can be quickly replaced by promoting one of the 15 available replicas. When selecting a multi-AZ deployment one read replica is automatically created. Failover requires no user intervention, and database operations resume in less than 30 seconds.

For single-AZ deployments, the recovery procedure includes a restore from the last known good backup. According to Aurora FAQs the process completes in under 15 minutes if the database needs to be restored in a different AZ. The documentation isn’t that specific, claiming that it takes less than 10 minutes to complete the restore process.

No change is required on the application side in order to connect to the new DB instance as the cluster endpoint doesn’t change during a replica promotion or instance restore.

Step 1: delete the primary instance to force a failover:

Step 2: automatic failover completed

For busy databases, the recovery time following a restart or crash is dramatically reduced since Aurora PostgreSQL doesn’t need to replay the transaction logs.

As part of full-managed service, bad data blocks and disks are automatically replaced.

Failover when replicas exist takes up to 120 seconds with often time under 60 seconds. Faster recovery times can be achieved by when failover conditions are pre-determined, in which case replicas can be assigned failover priorities.

Aurora PostgreSQL plays nice with Amazon RDS – an Aurora instance can act as a read replica for a primary RDS instance.

Aurora PostgreSQL supports Logical Replication which, just like in the community version, can be used to overcome built-in replication limitations. There is no automation or AWS console interface.

Security for PostgreSQL on AWS Aurora

At network level, Aurora PostgreSQL leverages AWS core components, VPC for cloud network isolation and Security Groups for network access control.

There is no superuser access. When creating a cluster, Aurora PostgreSQL creates a master account with a subset of superuser permissions:

postgres@pg107-dbt3medium-restored-cluster:5432 postgres> \du+ postgres

List of roles

Role name | Attributes | Member of | Description

-----------+-------------------------------+-----------------+-------------

postgres | Create role, Create DB +| {rds_superuser} |

| Password valid until infinity | |To secure data in transit, Aurora PostgreSQL provides native SSL/TLS support which can be configured per DB instance.

All data at rest can be encrypted with minimal performance impact. This also applies to backups, snapshots, and replicas.

Authentication is controlled by IAM policies, and tagging allows further control over what users are allowed to do and on what resources.

API calls used by all cloud services are logged in CloudTrail.

Client side Restricted Password Management is available via the rds.restrict_password_commands parameter.

PostgreSQL Backup and Recovery on AWS Aurora

Backups are enabled by default and cannot be disabled. They provide point-in-time-recovery using a full daily snapshot as a base backup.

Restoring from an automated backup has a couple of disadvantages: the time to restore may be several hours and data loss may be up to 5 minutes preceding the outage. Amazon RDS Multi-AZ Deployments solve this problem by promoting a read replica to primary, with no data loss.

Database Snapshots are fast and don’t impact the cluster performance. They can be copied or shared with other users.

Taking a snapshot is almost instantaneous:

Restoring a snapshot is also fast. Compare with PITR:

Backups and snapshots are stored in S3 which offers eleven 9’s of durability.

Aside from backups and snapshots, Aurora PostgreSQL allows databases to be cloned. This is an efficient method for creating copies of large data sets. For example, cloning multi-terabytes of data take only minutes and there is no performance impact.

Aurora PostgreSQL - Point-in-Time Recovery Demo

Connecting to cluster:

~ $ export PGUSER=postgres PGPASSWORD=postgres PGHOST=s9s-us-east-1.cluster-ctfirtyhadgr.us-east-1.rds.amazonaws.com

~ $ psql

Pager usage is off.

psql (11.3, server 10.7)

SSL connection (protocol: TLSv1.2, cipher: ECDHE-RSA-AES256-GCM-SHA384, bits: 256, compression: off)

Type "help" for help.Populate a table with data:

postgres@s9s-us-east-1:5432 postgres> create table s9s (id serial not null, msg text, created timestamptz not null default now());

CREATE TABLE

postgres@s9s-us-east-1:5432 postgres> select * from s9s;

id | msg | created

----+------+-------------------------------

1 | test | 2019-06-25 07:57:40.022125+00

2 | test | 2019-06-25 07:57:57.666222+00

3 | test | 2019-06-25 07:58:05.593214+00

4 | test | 2019-06-25 07:58:08.212324+00

5 | test | 2019-06-25 07:58:10.156834+00

6 | test | 2019-06-25 07:59:58.573371+00

7 | test | 2019-06-25 07:59:59.5233+00

8 | test | 2019-06-25 08:00:00.318474+00

9 | test | 2019-06-25 08:00:11.153298+00

10 | test | 2019-06-25 08:00:12.287245+00

(10 rows)Initiate the restore:

Once the restore is complete log in and check:

~ $ psql -h pg107-dbt3medium-restored-cluster.cluster-ctfirtyhadgr.us-east-1.rds.amazonaws.com

Pager usage is off.

psql (11.3, server 10.7)

SSL connection (protocol: TLSv1.2, cipher: ECDHE-RSA-AES256-GCM-SHA384, bits: 256, compression: off)

Type "help" for help.

postgres@pg107-dbt3medium-restored-cluster:5432 postgres> select * from s9s;

id | msg | created

----+------+-------------------------------

1 | test | 2019-06-25 07:57:40.022125+00

2 | test | 2019-06-25 07:57:57.666222+00

3 | test | 2019-06-25 07:58:05.593214+00

4 | test | 2019-06-25 07:58:08.212324+00

5 | test | 2019-06-25 07:58:10.156834+00

6 | test | 2019-06-25 07:59:58.573371+00

(6 rows)Best Practices

Monitoring and Auditing

- Integrate database activity streams with third party monitoring in order to monitor database activity for compliance and regulatory requirements.

- A fully-managed database service doesn’t mean lack of responsibility — define metrics to monitor the CPU, RAM, Disk Space, Network, and Database Connections.

- Aurora PostgreSQL integrates with AWS standard monitoring tool CloudWatch, as well as providing additional monitors for Aurora Metrics, Aurora Enhanced Metrics, Performance Insight Counters, Aurora PostgreSQL Replication, and also for RDS Metrics that can be further grouped by RDS Dimensions.

- Monitor Average Active Sessions DB Load by Wait for signs of connections overhead, SQL queries that need tuning, resource contention or an undersized DB instance class.

- Setup Event Notifications.

- Configure error log parameters.

- Monitor configuration changes to database cluster components: instances, subnet groups, snapshots, security groups.

Replication

- Use native table partitioning for workloads that exceed the maximum DB instance class and storage capacity

Encryption

- Encrypted database must have backups enabled to ensure data can be restored in case the encryption key is revoked.

Master Account

- Do not use psql to change the master user password.

Sizing

- Consider using different instance classes in a cluster in order to reduce costs.

Parameter Groups

- Fine tune using Parameter Groups in order to save $$$.

Parameter Groups Demo

Current settings:

postgres@s9s-us-east-1:5432 postgres> show shared_buffers ;

shared_buffers

----------------

10112136kB

(1 row)Create a new parameter group and set the new cluster wide value:

Associate the custom parameter group with the cluster:

Reboot the writer and check the value:

postgres@s9s-us-east-1:5432 postgres> show shared_buffers ;

shared_buffers

----------------

1GB

(1 row)- Set the local timezone

By default, the timezone is in UTC:

postgres@s9s-us-east-1:5432 postgres> show timezone;

TimeZone

----------

UTC

(1 row)Setting the new timezone:

And then check:

postgres@s9s-us-east-1:5432 postgres> show timezone;

TimeZone

------------

US/Pacific

(1 row)Note that the list of timezone values accepted by Amazon Aurora is not the timezonesets found in upstream PostgreSQL.

- Review instance parameters that are overridden by cluster parameters

- Use the parameter group comparison tool.

Snapshots

- Avoid additional storage charges by sharing the snapshots with another accounts to allow restoring into their respective environments.

Maintenance

- Change the default maintenance window according to organization schedule.

Failover

- Improve recovery time by configuring the Cluster Cache Management.

- Lower the kernel TCP keepalive values on the client and configure the application DNS cache and TTL, and PostgreSQL connection strings.

DBA Beware!

In addition to the known limitations avoid, or be aware of the following:

Encryption

- Once a database has been created the encryption state cannot be changed.

Aurora Serverless

- At this time, the PostgreSQL version of Aurora Serverless is only available in limited preview.

Parallel Query

- Amazon Parallel Query is not available, although the feature with the same name is available since PostgreSQL 9.6.

Endpoints

From Amazon Connection Management:

- 5 Custom Endpoints per cluster

- Custom Endpoint names cannot exceed 63 characters

- Cluster Endpoint names are unique within the same region

- As seen in the above screenshot (aurora-custom-endpoint-details) READER and ANY custom endpoint types aren’t available, use the CLI

- Custom Endpoints are unaware of replicas becoming temporarily unavailable

Replication

- When promoting a Replica to Primary, connections via the Reader Endpoint may continue to be directed for a brief time to the promoted Replica.

- Cross-region Replicas are not supported

- While released at the end of November 2017, the Amazon Aurora Multi-Master preview is still not available for PostgreSQL

- Watch for performance degradation when logical replication is enabled on the cluster.

- Logical Replication requires a published running PostgreSQL engine 10.6 or later.

Storage

- Maximum allocated storage does not shrink when data is deleted, neither is space reclaimed by restoring from snapshots. The only way to reclaim space is by performing a logical dump into a new cluster.

Backup and Recovery

- Backups retention isn’t extended while the cluster is stopped.

- Maximum retention period is 35 days— use manual snapshots for a longer retention period.

- point-in-time recovery restores to a new DB cluster.

- brief interruption of reads during failover to replicas.

- Disaster Recovery scenarios are not available cross-region.

Snapshots

- Restoring from snapshot creates a new endpoint (snapshots can only be restored to a new cluster).

- Following a snapshot restore, custom endpoints must be recreated.

- Restoring from snapshots resets the local timezone to UTC.

- Restoring from snapshots does not preserve the custom security groups.

- Snapshots can be shared with a maximum of 20 AWS account IDs.

- Snapshots cannot be shared between regions.

- Incremental snapshots are always copied as full snapshots, between regions and within the same region.

- Copying snapshots across regions does not preserve the non-default parameter groups.

Billing

- The 10 minutes bill applies to new instances, as well as following a capacity change (compute, or storage).

Authentication

- Using IAM database authentication imposes a limit on the number of connections per second.

- The master account has certain superuser privileges revoked.

Starting and Stopping

From Overview of Stopping and Staring an Aurora DB Cluster:

- Clusters cannot be left stopped indefinitely as they are started automatically after 7 days.

- Individual DB instances cannot be stopped.

Upgrades

- In-place major version upgrades are not supported.

- Parameter group changes for both DB instance and DB cluster take at least 5 minutes to propagate.

Cloning

- 15 clones per database (original or copy).

- Clones are not removed when deleting the source database.

Scaling

- Auto-Scaling requires that all replicas are available.

- There can be only `one auto-scaling policy`_ per metric per cluster.

- Horizontal scaling of the primary DB instance (instance class) is not fully automatic. Before scaling the cluster triggers an automatic failover to one of the replicas. After scaling completes the new instance must be manually promoted from reader to writer:

![New instance left in reader mode after DB instance class change.]() New instance left in reader mode after DB instance class change.

New instance left in reader mode after DB instance class change.

Monitoring

- Publishing PostgreSQL logs to CloudWatch requires a minimum database engine version of 9.6.6 and 10.4.

- Only some Aurora metrics are available in the RDS Console and other metrics have different names and measurement units.

- By default, Enhanced Monitoring logs are kept in CloudWatch for 30 days.

- Cloudwatch and Enhanced Monitoring metrics will differ, as they gather data from the hypervisor and respectively the agent running on the instance.

- Performance Insights_ aggregates the metrics across all databases within a DB Instance.

- SQL statements are limited to 500 characters when viewed with AWS Performance Insights CLI and API.

Migration

- Only RDS unencrypted DB Snapshots can be encrypted at rest.

- Migrations using the Aurora Read Replica technique take several hours per TiB.

Sizing

- The smallest available instance class is db.t3.medium and the largest db.r5.24xlarge. For comparison, the MySQL engine offers db.t2.small and db.t2.medium, however no db.r5.24xlarge in the upper range.

- max_connections upper limit is 262,143.

Query Plan Management

- Statements inside PL/pgSQL functions are unsupported.

Migration

Aurora PostgreSQL does not provide direct migration services, rather the task is offloaded to a specialized AWS product, namely AWS DMS.

Conclusion

As a fully-managed drop-in replacement for the upstream PostgreSQL, Amazon Aurora PostgreSQL takes advantage of the technologies that power the AWS cloud to remove the complexity required to setup services such as auto-scaling, query load-balancing, low-level data replication, incremental backups, and encryption.

The architecture and a conservative approach for upgrading the PostgreSQL engine provides the performance and the stability organizations from small to large are looking for.

The inherent limitations are just a proof that building a large scale Database as a Service is a complex task, leaving the highly specialized PostgreSQL Hosting Providers with a niche market they can tap into.

Avinash Kumar: Using plpgsql_check to Find Compilation Errors and Profile Functions

There is always a need for profiling tools in databases for admins or developers. While it is easy to understand the point where an SQL is spending more time using

There is always a need for profiling tools in databases for admins or developers. While it is easy to understand the point where an SQL is spending more time using EXPLAIN

or EXPLAIN ANALYZE

in PostgreSQL, the same would not work for functions. Recently, Jobin has published a blog post where he demonstrated how plprofiler can be useful in profiling functions.

plprofilerbuilds call graphs and creates flame graphs which make the report very easy to understand. Similarly, there is another interesting project called

plpgsql_checkwhich can be used for a similar purpose as

plprofiler, while it also looks at code and points out compilation errors. Let us see all of that in action, in this blog post.

Installing plpgsql-check

You could use yum on RedHat/CentOS to install this extension from PGDG repository. Steps to perform source installation on Ubuntu/Debian are also mentioned in the following logs.

On RedHat/CentOS

$ sudo yum install plpgsql_check_11

On Ubuntu/Debian

$ sudo apt-get install postgresql-server-dev-11 libicu-dev gcc make $ git clone https://github.com/okbob/plpgsql_check.git $ cd plpgsql_check/ $ make && make install

Creating and enabling this extension

There are 3 advantages of using

plpgsql_check

- Checking for compilation errors in a function code

- Finding dependencies in functions

- Profiling functions

When using plpgsql_check for the first 2 requirements, you may not need to add any entry to

shared_preload_libraries. However, if you need to use it for profiling functions (3), then you should add appropriate entries to

shared_preload_librariesso that it could load both

plpgsqland

plpgsql_check. Due to dependencies,

plpgsqlmust be before

plpgsql_checkin the

shared_preload_librariesconfig as you see in the following example :

shared_preload_libraries = plpgsql, plpgsql_check

Any change to

shared_preload_librariesrequires a restart. You may see the following error when you do not have

plpgsqlbefore

plpgsql_checkin the

shared_preload_librariesconfig.

$ grep "shared_preload_libraries" $PGDATA/postgresql.auto.conf shared_preload_libraries = 'pg_qualstats, pg_stat_statements, plpgsql_check' $ pg_ctl -D /var/lib/pgsql/11/data restart -mf waiting for server to shut down.... done server stopped waiting for server to start....2019-07-07 02:07:10.104 EDT [6423] FATAL: could not load library "/usr/pgsql-11/lib/plpgsql_check.so": /usr/pgsql-11/lib/plpgsql_check.so: undefined symbol: plpgsql_parser_setup 2019-07-07 02:07:10.104 EDT [6423] LOG: database system is shut down stopped waiting pg_ctl: could not start server Examine the log output. $ grep "shared_preload_libraries" $PGDATA/postgresql.auto.conf shared_preload_libraries = 'pg_qualstats, pg_stat_statements, plpgsql, plpgsql_check' $ pg_ctl -D /var/lib/pgsql/11/data start ..... server started

Once the PostgreSQL instance is started, create this extension in the database where you need to perform any of the previously discussed 3 tasks.

$ psql -d percona -c "CREATE EXTENSION plpgsql_check" CREATE EXTENSION

Finding Compilation Errors

As discussed earlier, this extension can help developers and admins determine compilation errors. But why is it needed? Let’s consider the following example where we see no errors while creating the function. By the way, I have taken this example from my previous blog post where I was talking about Automatic Index recommendations using

hypopgand

pg_qualstats. You might want to read that blog post to understand the logic of the following function.

percona=# CREATE OR REPLACE FUNCTION find_usable_indexes()

percona-# RETURNS VOID AS

percona-# $$

percona$# DECLARE

percona$# l_queries record;

percona$# l_querytext text;

percona$# l_idx_def text;

percona$# l_bef_exp text;

percona$# l_after_exp text;

percona$# hypo_idx record;

percona$# l_attr record;

percona$# /* l_err int; */

percona$# BEGIN

percona$# CREATE TABLE IF NOT EXISTS public.idx_recommendations (queryid bigint,

percona$# query text, current_plan jsonb, recmnded_index text, hypo_plan jsonb);

percona$# FOR l_queries IN

percona$# SELECT t.relid, t.relname, t.queryid, t.attnames, t.attnums,

percona$# pg_qualstats_example_query(t.queryid) as query

percona$# FROM

percona$# (

percona$# SELECT qs.relid::regclass AS relname, qs.relid AS relid, qs.queryid,

percona$# string_agg(DISTINCT attnames.attnames,',') AS attnames, qs.attnums

percona$# FROM pg_qualstats_all qs

percona$# JOIN pg_qualstats q ON q.queryid = qs.queryid

percona$# JOIN pg_stat_statements ps ON q.queryid = ps.queryid

percona$# JOIN pg_amop amop ON amop.amopopr = qs.opno

percona$# JOIN pg_am ON amop.amopmethod = pg_am.oid,

percona$# LATERAL

percona$# (

percona$# SELECT pg_attribute.attname AS attnames

percona$# FROM pg_attribute

percona$# JOIN unnest(qs.attnums) a(a) ON a.a = pg_attribute.attnum

percona$# AND pg_attribute.attrelid = qs.relid

percona$# ORDER BY pg_attribute.attnum) attnames,

percona$# LATERAL unnest(qs.attnums) attnum(attnum)

percona$# WHERE NOT

percona$# (

percona$# EXISTS

percona$# (